Are you vacillating between ETL or data warehouse automation tools Data-Warehouse-Automation-Tools and a "do-it -all-yourself" approach? Then you should definitely become familiar with dbt! It takes over all routine work easily without "getting in the way" and can be learned in a very short time – and it's open source. Ideal in conjunction with a cloud DB such as Snowflake. As such it comes as no surprise that for this kind of technology stack – which put emphasis on the data value chain using efficient and easy-to-operate technologies – the term “Modern Data Stack” is on the rise.

Modern BI architecture

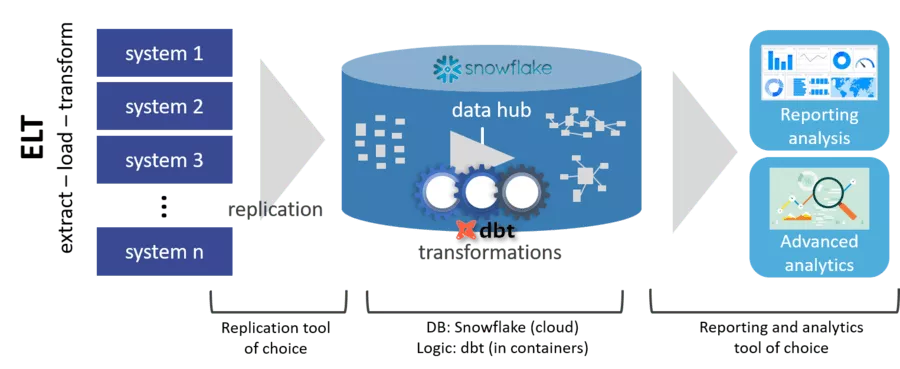

Until a few years ago, a common practice when configuring data hubs was to implement ETL (extract-transform-load) paths which performed data transformations en route from the source system to the target database. Used today is the more modern ELT (extract-load-transform) approach, in which the source data are first loaded (replicated) in raw form into the target database without any transformation - which takes place only later.

The reasons for this are obvious:

- Storage of these data (ultimately a replica of previous systems) has become inexpensive.

- The loading step (extract-load) is simple compared to later transformation, and can therefore be implemented with little effort, if necessary, using a dedicated tool.

- As a data lake, therefore, much more raw data can initially be loaded, than if transformed immediately (comparatively elaborate) in the downstream data hub. One also speaks here of a "staging area"

- The actual transformation performs best if it takes place in the same database.

- Maintenance and troubleshooting are greatly simplified, as the stored raw data always remain available for use – even if the logic changes retroactively, for example.

Best-of-Breed with dbt und Snowflake

Now dbt („Data Build Tool“) as typical part of the "best-of-breed" approach of the Modern Data Stack comes into play:

dbt only deals with transformations (the "T" in "ELT") and operates exclusively on the target database.

The (comparatively simple) step of loading ("EL") from the source systems to the target database must be carried out separately. Available for this is a wide choice of tools - purely cloud-based or with on-premise installation. Connectors to many systems and databases are often present too. Some available options are:

- Stitch

- Fivetran

- SymmetricDS

- Qlik Replicate (formerly Attunity Replicate)

- Or - often not the worst choice - a few lines of your own Python code over which you have full control.

Available as a match for the very rapidly deployable dbt is a cloud database which can also be started up quickly – and then performs brilliantly: Snowflake.

Does what it should - quickly and straightforwardly

dbt itself is open source and can therefore be used entirely free of charge on Windows as well as Linux. It is an easy-to-install command-line application. A complete load is triggered, for example, with the following command:

dbt run

There is also a moderately priced cloud variant which comes with its own browser-based IDE (development environment).

The salient features of dbt are:

- Lightweight, non-intrusive, very well documented.

- Developer-friendly: Very quickly learned and deployed, suitable for DevOps.

- Push-down: SQL commands are moved to the database and executed there efficiently and locally.

- Historicization (SCD2) out-of-the-box, easily configurable.

- Also included: Logging, testing framework, and generation of web documentation (incl. lineage).

- Perfectly harmonized with a version control system such as Git and CI/CD methods.

- Designed for batch and, if necessary, micro-batch processing.

- Can be integrated into any job scheduler, or triggered in cloud functions such as Azure functions.

- Best of all: Data engineers concentrate on the transformation logic in SQL (very similar to nested DB views) — the rest is automated by dbt.

Snowflake also provides a number of advantages suited to dbt:

- Analytical database (column-based) with very high performance.

- Completely cloud-based and set up in minutes (also offers a free 30-day trial version).

- Hosting with the following options: Microsoft Azure, Google Cloud Platform, AWS.

- Separate billing according to used storage and computing time.

- Maintenance-free and easy to use; not even DB indices are present, for example.

- Auto-sleep: DB can go to sleep automatically after an adjustable inactivity time, and does not generate computing-time costs.

- Zero-copy cloning, making it possible to create a copy of the complete database in seconds: For example, for integration tests or developer versions.

- Virtually all reporting systems such as Power BI, Tableau, Qlik, etc. support Snowflake as a database, as does the typical data-science tool stack

As mentioned above, dbt also supports databases other than Snowflake. Important for us:

With the very short training time and virtually free features of dbt, one should carefully think twice, in particular, about a self-made - however small - ELT framework (even if all developers like to do this

For today's data engineers

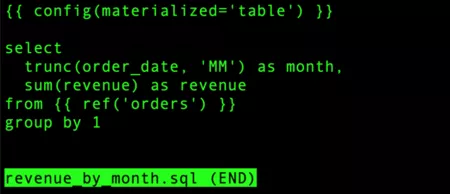

dbt is aimed at code-savvy BI developers who enjoy the practice of writing transformation rules in SQL as SELECT statements. Though a graphical interface for modelling data flows is not provided, this is often not disadvantageous: How easily logic can be formulated is shown below. Serving as an example is a rule which calculates monthly turnover and stores it in a materialized table titled "revenue_by_month":

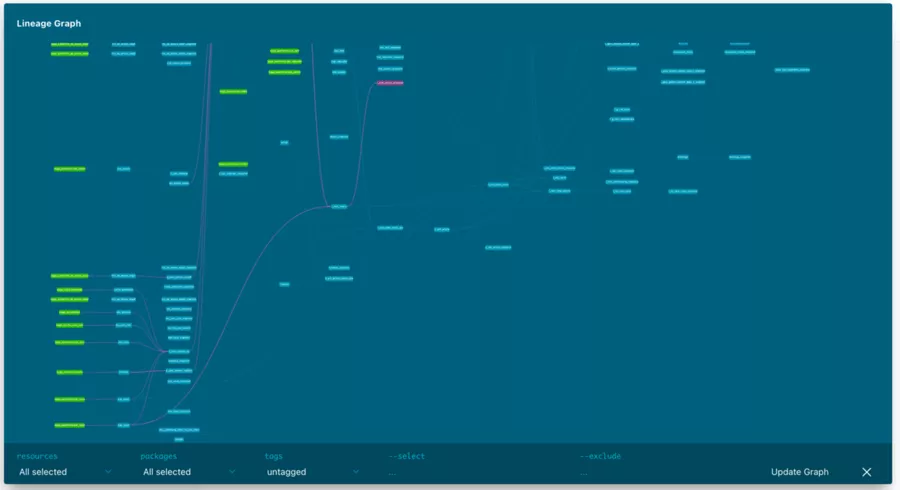

The ref notation in the SQL statement's "from" allows dbt to recognize the dependencies of each entity. The dependency graph (Direct Acyclic Graph = DAG) is then generated internally, and observed automatically in the execution sequence. dbt can also parallelize independently. The code is processed by a fully-fledged template processor (Jinja), so that macros can be used, for example.

Advantages of this code-oriented approach over a graphical ETL/ELT tool:

- In SQL, transformation logic can be formulated very concisely whilst saving time – and easily maintained later.

- Efficient, agile development: SQL statements can be tested directly during development, easily managed in versions, and merged during parallel development.

Disadvantage:

- You have to know (and like) SQL, but that's a typical MUST for data engineers anyway.

An affinity for scripting languages such as Python is also useful for writing auxiliary scripts (e.g. for loading from source systems to the database, the "EL"), if necessary. dbt itself is written in Python.

Even a great tool is just a tool

As with all BI tools - including graphic tools - the following applies:

Even with an efficient tool like dbt, a good internal structure is important to create a system which is robust, fault-tolerant, understandable as well as easy to maintain and expand.

BI experience is necessary here to later avoid an untenable "heap of spaghetti". dbt cannot enforce structure, nor is it intended to do so. It therefore provides full freedom for your own

- Naming and coding conventions

- Data architecture (layer concept, division of responsibilities, etc.)

Though dbt generates nice documentation (responding to clicks and zooms), only a good architecture results in a system which is easy to maintain in the long run.

Particularly good: The modelling technique (such as 3NF, star schemes, data vault) can be chosen in accordance with the use case. dbt gives freedom to decide whether the complete data model is first specified, or whether the modelling details emerge during development in a pragmatic and agile manner – if necessary, according to a rough target model.

We hope this article has acquainted you more closely with dbt in combination with a modern cloud database. Contact us if you would like to further talk shop about the advantages and disadvantages of dbt or Snowflake, or would like advice on rapid development of a data hub.