Physical organisation of historical tables

In this article, I propose a physical organization for historical tables that makes effective use of partition pruning to optimize query performance. The approach is designed specifically for data warehouses, so it accepts relatively complex data loads in exchange for fast selections.

I will use two metrics to evaluate the effectiveness of each described solution:

- Total Row Count (TRC)

- Rows per Single Read (RSR)

The Total Row Count determines the overall size of a table. The Rows per Single Read metric gives an idea of how much data must be scanned to satisfy a typical query. In my view, the typical query against a historical table returns the values of historized attributes as of a given date. Such a query touches a significant share of the key values, which makes index access ineffective.

Does a change in data demographics affect the metrics?

It's obvious that the effectiveness of a physical design depends on data demographics. I will use one case for an initial comparison, and later on we'll see how changes in data demographics influence the metrics. The case is a table that keeps the history of account balances in a bank. The data has the following dimensions:

- Count of Accounts (CA): 1 000 000

- History Depth (HD): 5 years

- Frequency of Changes (FC): 5 changes per account per month

First possibility: the interval table

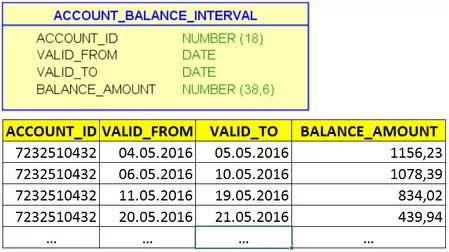

First, let's recall the traditional ways to keep historical data. The most frequently used solution is an interval table: each row has two dates that represent the validity interval of the record.

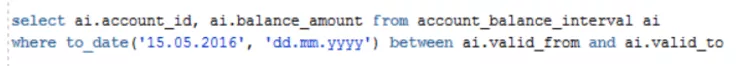

The balance for a given date is selected by a query that keeps only the rows whose validity interval (from VALID_FROM to VALID_TO) contains that date.

Based on this query, we can identify the main drawback of interval tables: date partitioning cannot be used, because the VALID_FROM and VALID_TO values of the required rows can lie arbitrarily far from the given date.
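A minimal sketch of such an as-of query, using Python's built-in `sqlite3` module as a stand-in for Oracle. The table name `account_balance`, the `account_id` and `balance` columns, the `'9999-12-31'` open end and the half-open convention (VALID_FROM <= date < VALID_TO) are all hypothetical assumptions, not the author's schema. The point is that the rows answering one date can start years apart.

```python
import sqlite3

# Hypothetical interval table; names and the half-open validity
# convention (valid_from <= day < valid_to) are assumptions.
conn = sqlite3.connect(":memory:")
conn.execute(
    """CREATE TABLE account_balance (
           account_id INTEGER,
           valid_from TEXT,  -- ISO-8601 dates compare correctly as text
           valid_to   TEXT,  -- '9999-12-31' marks the current version
           balance    REAL
       )"""
)
conn.executemany(
    "INSERT INTO account_balance VALUES (?, ?, ?, ?)",
    [
        (1, "2015-01-10", "2019-06-01", 100.0),  # unchanged for years
        (1, "2019-06-01", "9999-12-31", 150.0),
        (2, "2018-12-30", "2019-01-05", 20.0),   # changed a few days earlier
        (2, "2019-01-05", "9999-12-31", 25.0),
    ],
)


def balances_as_of(day):
    """Return (account_id, balance, valid_from) rows valid on an ISO date."""
    return conn.execute(
        """SELECT account_id, balance, valid_from
             FROM account_balance
            WHERE valid_from <= ? AND valid_to > ?
            ORDER BY account_id""",
        (day, day),
    ).fetchall()


# The matching rows start years apart: partitions on valid_from could only
# exclude rows that start after the date; all earlier ones must be scanned.
print(balances_as_of("2019-01-03"))
# [(1, 100.0, '2015-01-10'), (2, 20.0, '2018-12-30')]
```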

The performance metrics can be calculated with the following formulas:

TRC = CA * FC * HD * 12

RSR = TRC (because the whole table must be read)
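The two formulas translate directly into a small helper; the function and parameter names are hypothetical and only mirror CA, HD and FC from the case description.

```python
def interval_table_metrics(ca, hd_years, fc_per_month):
    """TRC and RSR of an interval table (one row per balance change)."""
    trc = ca * fc_per_month * hd_years * 12  # 12 months per year
    rsr = trc  # no date pruning: every as-of query reads the whole table
    return trc, rsr


trc, rsr = interval_table_metrics(ca=1_000_000, hd_years=5, fc_per_month=5)
print(f"TRC = {trc:,}, RSR = {rsr:,}")
# TRC = 300,000,000, RSR = 300,000,000
```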

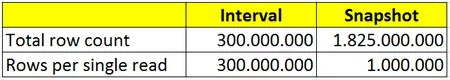

In summary, we have:

- Total row count = 300 000 000

- Rows per single read = 300 000 000

Second possibility: the snapshot table

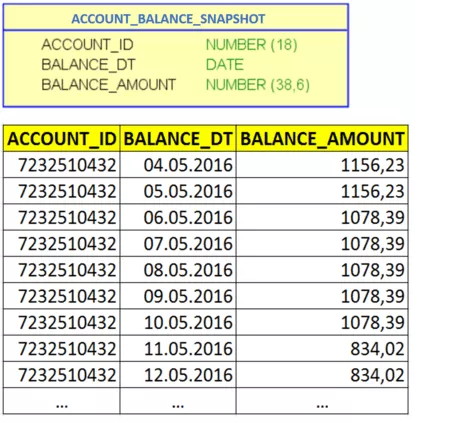

The second option is a snapshot table, which means saving balances for each account for each day.

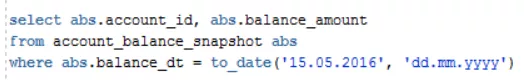
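To see where the redundancy of a snapshot table comes from, here is a sketch that expands interval rows into one row per day. The function name, the tuple layout and the half-open validity convention are assumptions for illustration only; in this example two interval rows become four daily rows, each repeating an unchanged balance.

```python
from datetime import date, timedelta


def to_daily_snapshots(intervals, start, end):
    """Expand interval rows into one (balance_dt, account_id, balance) row per day.

    intervals: iterable of (account_id, valid_from, valid_to, balance) with
    half-open validity [valid_from, valid_to); only days in [start, end] are kept.
    """
    snapshots = []
    for account_id, valid_from, valid_to, balance in intervals:
        day = max(valid_from, start)
        last = min(valid_to - timedelta(days=1), end)
        while day <= last:
            # The same balance is repeated for every day of its interval.
            snapshots.append((day, account_id, balance))
            day += timedelta(days=1)
    snapshots.sort()
    return snapshots


intervals = [
    (1, date(2019, 1, 1), date(2019, 1, 3), 100.0),
    (1, date(2019, 1, 3), date(9999, 12, 31), 150.0),
]
rows = to_daily_snapshots(intervals, date(2019, 1, 1), date(2019, 1, 4))
print(len(rows))  # 4
```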

This approach stores a lot of redundant information, but it allows the balances on a given date to be selected with a simple equality condition on the date column (BALANCE_DT equal to the given date).
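A toy in-memory model of what the equality condition buys when the table is partitioned by BALANCE_DT with one partition per day. It only illustrates the idea of pruning and is not how Oracle implements it; the class and method names are hypothetical. The number of rows read equals the number of accounts, no matter how deep the history is.

```python
from collections import defaultdict
from datetime import date, timedelta


class DailyPartitionedTable:
    """Toy model of a snapshot table partitioned by BALANCE_DT, one partition per day."""

    def __init__(self):
        # balance_dt -> list of (account_id, balance)
        self.partitions = defaultdict(list)

    def insert(self, balance_dt, account_id, balance):
        self.partitions[balance_dt].append((account_id, balance))

    def select_as_of(self, balance_dt):
        """Equality on the partition key: only one partition is touched.

        Returns the matching rows and the number of rows scanned.
        """
        rows = self.partitions.get(balance_dt, [])  # .get avoids creating a partition
        return rows, len(rows)


table = DailyPartitionedTable()
start = date(2019, 1, 1)
for offset in range(10):            # 10 days of history
    for account_id in range(1, 4):  # 3 accounts
        table.insert(start + timedelta(days=offset), account_id, 100.0 * account_id)

rows, scanned = table.select_as_of(date(2019, 1, 5))
total = sum(len(p) for p in table.partitions.values())
print(total, scanned)  # 30 3
```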

For this kind of query, Oracle can easily use partition pruning if the base table is partitioned by BALANCE_DT. Therefore, we can calculate the performance metrics as follows:

TRC = CA * HD * 365

RSR = CA (there is one record per account per partition)
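The same formulas as a hypothetical helper, followed by a comparison against the interval-table figures of 300 000 000 rows for both metrics. Like the formula, the sketch ignores leap days.

```python
def snapshot_table_metrics(ca, hd_years):
    """TRC and RSR of a daily snapshot table partitioned by date."""
    trc = ca * hd_years * 365  # one row per account per day (leap days ignored)
    rsr = ca                   # one daily partition holds one row per account
    return trc, rsr


trc, rsr = snapshot_table_metrics(ca=1_000_000, hd_years=5)
print(f"TRC = {trc:,}, RSR = {rsr:,}")
# TRC = 1,825,000,000, RSR = 1,000,000

# Compared with the interval table (TRC = RSR = 300,000,000):
print(f"{trc / 300_000_000:.1f}x more rows, {300_000_000 // rsr}x fewer rows per read")
# 6.1x more rows, 300x fewer rows per read
```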

In summary, we have:

- Total row count = 1 825 000 000

- Rows per single read = 1 000 000

Each single read costs much less for a snapshot table than for an interval table, but this advantage is undermined by the much larger overall table size. A table of this size is less likely to be cached and requires more time for backup, restore, and maintenance operations.