Why Serverless for Data Science?

Serverless in the sense of “Function as a Service” (FaaS) is the highest level of abstraction in the XaaS family. It means that the user hands over all infrastructure-related tasks to the cloud provider. There is no need to worry about provisioning, maintaining or scaling the servers in the background.

The main advantage of this idea is that users only pay when their functions are actually executed (see below for details on the pricing model). If a piece of code is only needed sporadically, the potential cost savings compared to dedicated servers are huge.

For data science, several use cases can benefit from a serverless approach. Generally speaking, serverless shines with on-demand services. These can be file-triggered processing pipelines or API-triggered prediction requests. In the example below, I demonstrate this approach with an API-triggered request to analyze social media for a particular search term.

All major cloud providers offer FaaS within their ecosystems. I use AWS and its Lambda functions for this example, but that does not mean that it is the best choice for every use case.

AWS Lambda as the Central Building Block

AWS introduced Lambda in 2014 and has extended its functionality over time. At the time of writing, it supports Node.js, Python, Java, Ruby, Go and C#. At its core, a Lambda function consists of two parts:

- The function to call.

- Optional layers that add further functionality, such as additional Python packages.
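In Python, the function to call is a handler. Lambda invokes it with two arguments: the trigger's payload (`event`, usually a dict) and a runtime `context` object. Packages shipped in a layer are imported like any installed package, so the handler code itself does not refer to the layer. Below is a minimal sketch. It assumes the console defaults of a file `lambda_function.py` with a handler named `lambda_handler` (both names are configurable). The `name` key in the event is a hypothetical example.

```python
import json


def lambda_handler(event, context):
    # `event` carries the trigger's payload; `context` holds runtime
    # metadata (request ID, remaining time) and is unused here.
    # The "name" key is a hypothetical example, not a Lambda convention.
    name = event.get("name", "world")
    return {"message": f"Hello, {name}!"}


if __name__ == "__main__":
    # Local smoke test: in AWS, Lambda supplies event and context itself.
    print(json.dumps(lambda_handler({"name": "Lambda"}, None)))
```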

There are three cost drivers for Lambda functions:

- The number of executions per month.

- The memory allocated for a function.

- The execution time in milliseconds.

For instance, executing a function 1,000 times a day that uses 512 MB of memory and takes about 10 seconds per run costs only about $2.51 per month (not counting the free tier). Execution time is also the most important cost driver, which is why Lambda functions shine when used for small and fast pieces of code. High numbers of requests are no problem, but long-running executions can quickly become relatively expensive. To calculate costs for your own use case, see the pricing calculator provided by AWS.
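The small sketch below makes the arithmetic behind the $2.51 figure explicit. It assumes AWS's on-demand x86 prices for us-east-1 ($0.20 per million requests and $0.0000166667 per GB-second), a 30-day month and no free tier. Prices differ by region and architecture and can change, so treat the constants as assumptions.

```python
# Assumed on-demand prices (x86, us-east-1); check current AWS pricing.
PRICE_PER_REQUEST = 0.20 / 1_000_000   # USD per invocation
PRICE_PER_GB_SECOND = 0.0000166667     # USD per GB-second of compute


def monthly_lambda_cost(calls_per_day, memory_mb, duration_s, days=30):
    """Rough monthly cost of one function, ignoring the free tier."""
    invocations = calls_per_day * days
    # Compute is billed on allocated memory (in GB) times run time.
    gb_seconds = invocations * (memory_mb / 1024) * duration_s
    return invocations * PRICE_PER_REQUEST + gb_seconds * PRICE_PER_GB_SECOND


# The example from the text: 1,000 calls/day, 512 MB, ~10 s each.
print(f"${monthly_lambda_cost(1_000, 512, 10):.2f}")  # → $2.51
```

Nearly all of the cost comes from the GB-seconds term (150,000 GB-seconds here). This is why execution time, together with memory, dominates the bill.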

There is a long list of AWS services that can trigger Lambda functions and receive their results. For data science use cases, two triggers stand out. First, there are triggers related to file storage (S3 on AWS). They fire when a new file, either data or the configuration for a process, is uploaded to S3, and they initiate the next step in a chain of functions. Second, Amazon API Gateway can invoke a function in response to an HTTP request, and there are many different ways to set it up. In this case, we are in the realm of on-demand analytics.
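The two triggers deliver differently shaped `event` payloads to the handler. Below is a minimal sketch of reading them. It assumes the S3 event-notification format and API Gateway's Lambda proxy integration. The sample events are trimmed to the fields used, and the bucket, key and parameter names are hypothetical.

```python
from urllib.parse import unquote_plus


def files_from_s3_event(event):
    """Return (bucket, key) pairs from an S3 event notification."""
    pairs = []
    for record in event.get("Records", []):
        bucket = record["s3"]["bucket"]["name"]
        # Object keys arrive URL-encoded (e.g. spaces as "+").
        key = unquote_plus(record["s3"]["object"]["key"])
        pairs.append((bucket, key))
    return pairs


def param_from_api_event(event, name):
    """Read a query-string parameter from an API Gateway proxy event."""
    # API Gateway may send None (or omit the field) when there are no parameters.
    params = event.get("queryStringParameters") or {}
    return params.get(name)


s3_event = {"Records": [{"s3": {"bucket": {"name": "my-data-bucket"},
                                "object": {"key": "raw/new+file.csv"}}}]}
api_event = {"queryStringParameters": {"search_term": "serverless"}}
print(files_from_s3_event(s3_event))  # → [('my-data-bucket', 'raw/new file.csv')]
print(param_from_api_event(api_event, "search_term"))  # → serverless
```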

The list of services that can receive the results is similar. First, Lambda functions can write their results to dedicated S3 buckets, from where other services can pick them up. Second, Lambda functions can return their output through API Gateway. Third, it is easy to send notifications about the results via Amazon SNS or SES.
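The output routes can be sketched as small helpers, shown here for API Gateway, S3 and SNS. This minimal sketch assumes boto3 clients are passed in, for example `boto3.client("s3")` and `boto3.client("sns")`, which keeps the helpers testable without AWS access. The response shape assumes API Gateway's Lambda proxy integration. The subject line is a hypothetical example.

```python
import json


def api_gateway_response(result, status=200):
    """Wrap a result in the shape an API Gateway proxy integration expects."""
    return {"statusCode": status,
            "headers": {"Content-Type": "application/json"},
            "body": json.dumps(result)}


def store_result(s3_client, bucket, key, result):
    """Write a result as JSON to S3 via a boto3 S3 client."""
    s3_client.put_object(Bucket=bucket, Key=key,
                         Body=json.dumps(result).encode("utf-8"),
                         ContentType="application/json")


def notify(sns_client, topic_arn, result):
    """Publish a result to an SNS topic via a boto3 SNS client."""
    sns_client.publish(TopicArn=topic_arn,
                       Subject="New analysis result",
                       Message=json.dumps(result))
```

A handler could then call, for example, `store_result(boto3.client("s3"), "my-results-bucket", "results/latest.json", result)` before returning `api_gateway_response(result)`. The bucket and key names are placeholders.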

The choice of triggers and receivers is not an either-or question. Instead, a Lambda function can be started by different triggers and send its results to multiple receivers. However, the simplicity of adding further dependencies should not lead to a mindless increase in complexity.

Example – Sentiment Analysis of Tweets

Let’s have a look at a concrete example of how to use a Lambda function for data science purposes. I chose an example from the realm of on-demand analytics, but there are many more potential uses for such an approach.

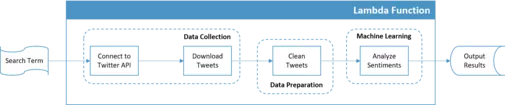

This example will do four things:

- It will receive a search term as a key-value pair, which is what an API gateway would send in an implementation.

- It will use this search term to query the Twitter API for matching tweets.

- It will evaluate the sentiments of the collected tweets.

- It will return key-value pairs with the proportion of positive, neutral and negative tweets for the given search term. Again, this would be the result an API gateway would expect for further use.

Figure 1: Tasks carried out by the exemplary Lambda function

To implement this Lambda function, we need to carry out three steps:

- Write the Python code.

- Build a customized layer with the necessary packages.

- Test the Lambda function with various search terms to evaluate its functionality as well as its runtime.

The third step will enable us to estimate the final costs of running this function as a part of our fictional on-demand analytics platform.

Implementation

The Lambda function that takes care of the four tasks outlined above looks like this:

https://gist.github.com/timo-boehm/598e9f7ae5231f889a197af96be48787

```python
import re

import tweepy
from textblob import TextBlob


def clean_tweet(tweet):
    # Remove @mentions, links and selected punctuation/newlines.
    return re.sub(r"@\w*|https?:\S*|[,.;\n'\"()]", "", tweet).strip()


def lambda_function(event, context):
    # Lambda calls the configured handler with (event, context); the key
    # "search_term" is an assumption about what API Gateway passes on.
    search_term = event["search_term"]

    # Details for connection (placeholders, see note below)
    auth = tweepy.OAuthHandler(consumer_key="XXXX",
                               consumer_secret="XXXX")
    auth.set_access_token(key="XXXX",
                          secret="XXXX")

    # Connect to API and collect results. The standard search endpoint
    # returns at most 100 tweets per request (tweepy 4.x renamed
    # api.search to api.search_tweets).
    api = tweepy.API(auth)
    api_output = api.search(q=search_term, count=100)

    # Prepare tweets for analysis; the set removes duplicates after cleaning
    unique_tweets = {clean_tweet(t.text) for t in api_output}

    # Calculate sentiments for all tweets (polarity ranges from -1 to 1)
    sentiments = [TextBlob(t).sentiment.polarity for t in unique_tweets]

    # Aggregate sentiments in one dictionary; avoid dividing by zero
    # when the search returns no tweets
    n = len(sentiments)
    if n == 0:
        return {"positive": 0.0, "neutral": 0.0, "negative": 0.0}
    return {"positive": sum(s > 0 for s in sentiments) / n,
            "neutral": sum(s == 0 for s in sentiments) / n,
            "negative": sum(s < 0 for s in sentiments) / n}
```

For the sake of simplicity, I hard-coded the necessary keys into the function, which should obviously be handled differently in an actual use case! You can also see that I tried to keep the required packages to a minimum. The reason for that lies in the second part of a Lambda function: layers.
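Regarding the hard-coded keys: one common alternative is to store them as environment variables in the function's configuration. For secrets, a dedicated store such as AWS Secrets Manager is preferable. Below is a minimal sketch of the environment-variable route. The variable names and the helper `twitter_credentials` are hypothetical.

```python
import os

# Hypothetical variable names, set in the Lambda function's configuration.
CREDENTIAL_NAMES = ["TWITTER_CONSUMER_KEY", "TWITTER_CONSUMER_SECRET",
                    "TWITTER_ACCESS_TOKEN", "TWITTER_ACCESS_SECRET"]


def twitter_credentials(env=os.environ):
    """Read API credentials from environment variables instead of code."""
    missing = [name for name in CREDENTIAL_NAMES if name not in env]
    if missing:
        # Fail fast with a clear message rather than an opaque auth error.
        raise RuntimeError(f"Missing environment variables: {missing}")
    return {name: env[name] for name in CREDENTIAL_NAMES}
```

Inside the handler, `creds = twitter_credentials()` would then replace the `"XXXX"` placeholders, for example `consumer_key=creds["TWITTER_CONSUMER_KEY"]`.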

There are several ways to upload a layer to AWS. For a start, the easiest is to upload a zip file with the correct folder structure: python/lib/pythonX.X/site-packages/. The critical part here is to include only the relevant packages and their dependencies. The larger the zip file, the less performant the Lambda function tends to be, since its contents get loaded when the function starts up.
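The folder structure can be produced with a short script. This sketch assumes the required packages were first installed into a local folder, for example with `pip install tweepy textblob --target build/site-packages`. `build_layer_zip` is a hypothetical helper, and `python_version` must match the function's runtime.

```python
import zipfile
from pathlib import Path


def build_layer_zip(site_packages, zip_path, python_version):
    """Zip an installed package folder into the layout Lambda expects."""
    prefix = Path("python", "lib", f"python{python_version}", "site-packages")
    site_packages = Path(site_packages)
    with zipfile.ZipFile(zip_path, "w", zipfile.ZIP_DEFLATED) as zf:
        for file in sorted(site_packages.rglob("*")):
            rel = file.relative_to(site_packages)
            # Skip directories and bytecode caches to keep the layer small.
            if file.is_dir() or "__pycache__" in rel.parts:
                continue
            # Zip entries always use forward slashes.
            zf.write(file, (prefix / rel).as_posix())
    return zip_path
```

Packages with compiled extensions also have to be built for Lambda's Linux environment. Installing them on a Mac or Windows machine and zipping the result may therefore not work.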

With the necessary pieces in place, we can evaluate our example. After some experimentation, the average runtime appears to be about 2,400 ms, or roughly 2.4 seconds. Using the AWS calculator mentioned above, I could serve 10,000 API calls each day for less than $3 a month! In other words: an unbeatable cost-benefit ratio.
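A runtime estimate like this can be obtained by timing the handler over several search terms. Below is a rough local sketch. `fake_handler` is a hypothetical stand-in for the tweet handler. Local wall-clock times only approximate what Lambda bills; the billed figure is reported per invocation as "Billed Duration" in the function's CloudWatch logs.

```python
import statistics
import time


def average_runtime_ms(handler, events, repeats=3):
    """Average wall-clock time of handler calls, in milliseconds."""
    timings = []
    for event in events:
        for _ in range(repeats):
            start = time.perf_counter()
            handler(event, None)  # no real Lambda context when run locally
            timings.append((time.perf_counter() - start) * 1000)
    return statistics.mean(timings)


def fake_handler(event, context):
    # Hypothetical stand-in that simulates ~10 ms of work.
    time.sleep(0.01)
    return {"positive": 0.0, "neutral": 1.0, "negative": 0.0}


terms = [{"search_term": t} for t in ["serverless", "python", "aws"]]
print(f"{average_runtime_ms(fake_handler, terms):.0f} ms")
```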

Serverless Potential in Data Science

Lambda functions (and their cousins on other cloud platforms) are not suitable for all data science applications. Moreover, the simplicity of setting them up is beautiful and dangerous at the same time. Beautiful, because Lambda functions enable rapid experimentation. Dangerous, because they invite quick fixes that introduce technical debt and can lead to unaccounted-for quality problems. Therefore, you have to make sure that their use is clearly defined, especially when it comes to the resources and operations a Lambda function is allowed to access.
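On AWS, what a function may access is governed by its execution role. This is an IAM role whose policies list the allowed actions and resources. The sketch below is a narrowly scoped policy document, written as a Python dict so that it can be generated or checked in code. The bucket name and the `models/` prefix are hypothetical.

```python
import json

# Hypothetical bucket; replace with the resources the function really needs.
MODEL_BUCKET = "my-model-bucket"

# Least-privilege policy: read objects under one prefix and write logs,
# nothing else.
policy = {
    "Version": "2012-10-17",
    "Statement": [
        {"Effect": "Allow",
         "Action": ["s3:GetObject"],
         "Resource": f"arn:aws:s3:::{MODEL_BUCKET}/models/*"},
        {"Effect": "Allow",
         "Action": ["logs:CreateLogGroup", "logs:CreateLogStream",
                    "logs:PutLogEvents"],
         "Resource": "arn:aws:logs:*:*:*"},
    ],
}

print(json.dumps(policy, indent=2))
```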

On the other hand, they also have tremendous potential. For instance, trained machine learning models can be stored in S3 buckets and used by Lambda functions for inference. In combination with other AWS services, such as AWS Step Functions, they can be integrated smoothly into data processing pipelines that include smaller pieces of machine learning code.
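A common pattern for such inference functions is to load the model once per execution environment and reuse it across warm invocations. This works because objects created outside the handler persist between calls to the same environment. The minimal sketch below makes several assumptions: a pickled scikit-learn-style model whose `.predict()` returns a NumPy array, a hypothetical bucket `my-model-bucket`, a hypothetical key `models/model.pkl`, and a hypothetical `features` key in the event.

```python
import pickle

# Module-level objects survive between warm invocations of the same
# execution environment, so each model is downloaded and unpickled once.
_MODEL_CACHE = {}


def get_model(key, fetch_bytes):
    """Return the model stored under `key`, fetching it only on first use."""
    if key not in _MODEL_CACHE:
        # Only unpickle files you control: pickle can execute arbitrary code.
        _MODEL_CACHE[key] = pickle.loads(fetch_bytes(key))
    return _MODEL_CACHE[key]


def fetch_from_s3(key, bucket="my-model-bucket"):
    """Download raw bytes from S3 (the bucket name is hypothetical)."""
    import boto3  # imported lazily so the sketch runs locally without boto3
    return boto3.client("s3").get_object(Bucket=bucket, Key=key)["Body"].read()


def lambda_handler(event, context):
    model = get_model("models/model.pkl", fetch_from_s3)
    return {"prediction": model.predict([event["features"]]).tolist()}
```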

As with many other terms and concepts, serverless is not the solution for everything. Nevertheless, it is a powerful tool to add to a data science mindset.