New data sources and areas of application emerging constantly continue to drive the steady expansion of data storage systems such as DWH, Data Lake and Analytics Platform. Data management processes must also keep pace with growing requirements. Not seldom do small BI applications grow into major initiatives in which several development teams participate. The situation is exacerbated in many industries by the need to make adjustments faster than ever before. This demands short reaction times and high flexibility from the teams and, not least of all, from the infrastructure.

Continous integration to meet increasing demands on software development

To cope with rising demands on the size and number of development teams, as well as the accompanying, parallel development processes in a system, principles such as continuous integration (CI) established themselves long ago in traditional software development.s CI aims to ensure that code and other development objects are regularly integrated and tested using automatic jobs. Changes here are integrated into a central repository under version control. Committing these changes starts automatic builds which include various tests and consistency checks. If any test fails, the developer is informed automatically.

For handling database systems as backends of applications, further steps such as database updates and tests can be incorporated into the build process.[1] Because design and advancement of data warehouse systems (DWS) place a focus on development of database systems, the special features of CI are to be described with regard to database development versus application development. For this, the basic functionality of CI is first described, and general key practices are presented. Based on the key practices, the main differences are then shown, and solutions are proposed.

Overview of continuous integration

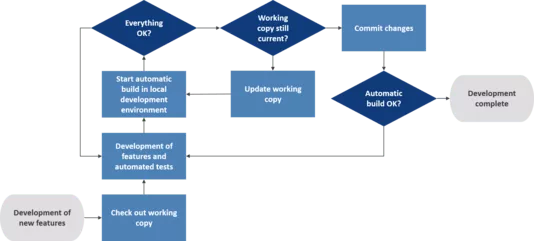

Continuous integration requires no use either of a particular tool set, or specific, individual tools. Proposed within the framework of CI are solely practices which allow elimination of many problems related to parallel development in the same system. Most suitable for an introduction to this subject is the workflow of development in a CI environment:

Figure 1 – Workflow for developers in a CI environment

The workflow in Figure 1 represents development of a feature in a simplified development environment with a central master or trunk and a version control system (VCS) in which continuous integration takes place with the help of commit operations. As shown in Figure 1, development of a new feature begins with the developer creating or updating their working copy of the code base. After that, a new feature and a related test can be developed. The test helps ensure compliance with specifications during development, and can be used further as a regression test after development. Once the entire code base has been successfully rolled out with the new development into the local environment, and tests concerning the modified objects have proven successful, the code base should be reviewed for possible changes, and updated if required, to anticipate potential conflicts in merging. Finally, the changes are integrated into the common code base in the VCS. This initiates execution of an automatic build including automatic tests. This step is crucial, because the test environments set up for it resemble the production configuration more closely than would be possible with local development alone. If all test results here are also OK, the developer's work is complete. Otherwise the remaining errors are eliminated and the CI cycle is repeated.

Key practices for effective continuous integration

To allow such an approach in conjunction with different test instances during development, certain prerequisites must be met. Already in 2006, Martin Fowler described a number of key practices (KP) which should be observed for effective continuous integration: [2]

KP 1 |

|

KP 2 |

|

KP 3 |

|

KP 4 |

|

KP 5 |

|

KP 6 |

|

KP 7 |

|

KP 8 |

|

KP 9 |

|

KP 10 |

|

KP 11 |

|

table 1 – Own representation of CI key practices according to Martin Fowler (2006)

For DWS, the presented key practices have different consequences, as is the case when creating software. However, many principles can be applied on an almost 1:1 basis. In the case of other key practices, special circumstances must be considered or compromises must be found. The major challenges are dealt with in more detail next:

Challenges in using CI during database development

Full deployment vs. delta deployment

While code can be overwritten entirely during release of a software application (full deployment), it is necessary to bear in mind, when working with database systems, that renewed deployment of objects usually causes a loss of the persistent data therein. Database systems are also used in traditional software development, but mostly just as a backend for a software or web application. The database system is optimized in such cases for smaller transactions, changes in the data model being rare and usually purely additive. Furthermore, a smaller number of developers usually work on the database system. Most of the changes can be created using simple scripts or DB Diffs, special attention to data being required only in individual cases. In DWS, however, a large portion of the work must be dedicated to the database, with structural changes, data migrations, archivings and updates forming part of daily activities. Changes here can therefore not be created and deployed simply via DB Diffs. To be made available for a release is a specific transition / upgrade script which takes into account data changes (DML) in addition to structural changes (DDL). Here it is essential to ensure that data are not lost or falsified. An additional challenge is posed by the large amounts of data (compared to transactional systems). To mitigate the consequential rise in complexity, various tools such as Liquibase and Flyway have been established. An overview of possible tools is provided here.

Exclusive creation of upgrade scripts can lead to problems, however. If the totality of structural changes and the configuration files of all upgrade scripts are always implemented sequentially in order to deploy the current development stage in an empty environment, this can quickly lead to bottlenecks, thus breaching key practice 7. Furthermore, the code alone does not make it easy to determine which objects exist in which form in the current development stage, because they are distributed in different upgrade scripts. These are just a few of the reasons for generating and maintaining, wherever possible, a snapshot of the current development stage in addition to the upgrade scripts. Caution, however: If two code artefacts are maintained, it is necessary to ensure (e.g. through a use of automatic DB Diffs) that they do not drift apart.

Dependencies within a build

Existent within a database are dependencies between objects which cannot always be resolved easily. For example, a view can access a function, but also vice versa. Challenges can arise especially if snapshots are used for the reasons previously mentioned, and the latest development stage is to be rolled out into an empty environment. In traditional software development, the standard procedure at this point would be to analyze the system and its loaders, this ultimately being similar to deployment of object categories. Due to the circumstances described above, however, it is not sufficient to simply deploy object categories building on each other in DWS (first all tables, then all views, and so on). Probably the most simple, but not very elegant solution would be to accept the dependencies and manage them to the greatest possible extent with coding standards and conventions. A more elegant but perhaps costly solution would be to analyze the dependencies before deployment and create an optimal flow path.

Heterogenous production environment

Using CI, many problems can be detected and fixed early by automating and testing the individual steps right up to the productive environment. In software development, the CI environment usually reflects the production environment quite accurately. However, the practicality of this for database development is limited for the following reasons:

- Gigantic storage space might be required for a full copy of the production environment.

- Cloning (or importing a backup) of the production environment can take a great deal of time.

- Due to the GDPR, data can usually not be used on a 1:1 basis for testing purposes.

- For financial reasons, multiple instances of hardware and licenses cannot always be made available.

The balancing act at this point is to ensure the most realistic possible test environment (see key practice 8) and simultaneously a fast build (see key practice 7) despite the limitations described. The mentioned challenges can only be met with compromises, especially for large production environments in DWS. Accordingly, an environment designed specifically for tests geared toward production can be loaded with an older, anonymized data version at regular intervals and used jointly by all developers (pre-production). However, developers must perform very precise coordination here in order to optimize use of resources and avoid conflicts. Due to the longer period until the environment is updated, developers should keep the environment "tidy" and handle the data prudently.

Automated tests

Various restrictions are imposed on DWS, in contrast to application development where individual units can be tested in considerable isolation, and interfaces can be simulated simply. Because data are actually copied with the help of management processes in the database for meaningful tests, individual tests cannot be parallelized readily. Otherwise any test could be overwritten / manipulated through parallel invocation of another test's source data before its conclusion. In addition to parallelism of tests within a build, the same reasons also prevent concurrent starting of multiple builds with tests if they are deployed for the same environment. In addition, sequential execution of individual tests also means a prolonged running period. It is true that the running period can be shortened by generating procedure scripts from the dependencies for optimal sequencing during test execution (first Test1, then Test2 and Test3 parallel, then Test4, etc.). However, the build can only be accelerated to a certain degree here, and full coverage is only possible conditionally, because not all dependencies can readily be read out automatically. For these reasons, all tests cannot be readily executed with each build. A more elaborate solution would be to perform only those tests for which objects have actually changed. Due to dynamic SQL alone, however, full coverage would be difficult to realize here, so that compromises must be reached again in this case. For example, nightly builds running at fixed times can be configured for automated tests. Furthermore, tests can also be separated so that lightweight unit tests are executed with each build, for example, while more extensive and time-consuming tests are assigned to nightly builds.

Conclusion & outlook

Using CI, the quality of (software) increments can be increased significantly before delivery to the production environment. In addition to the possibility of regenerating the local development environment at any time and detecting conflicts at an early stage, the CI environment also offers added educational value: Through short feedback loops, the developer is automatically taught to work in a more orderly manner and more prudently, so that the automatic build results contain "red lights" less frequently.

Most of the key practices can be adopted from traditional software development and their benefits are directly available. By contrast, other key practices are influenced by natural differences between software and DWS development, and cannot be applied on a 1:1 basis. A significant difference here is that most errors in DWS cannot be found until import into a test environment and start of data management processes (run-time), whereas during traditional software development, more extensive testing of the code is possible already at compile-time.

Various trade-offs between test coverage, benefits, operating times and costs make it possible to deal with the specific characteristics of databases and reap the benefits of a CI landscape.

Sources

[1] cf. Glover, Andrew et al.: Continuous Integration: Improving Software Quality and Reducing Risk, Https://www.safaribooksonline.com/library/view/continuous-integration-improving/9780321336385/#toc, 2007.

[2] cf. Fowler, Martin: Continuous Integration. https://www.martinfowler.com/articles/continuousIntegration.html, 2016.