The strong performance of a HANA database stems from its consistent design as an in-memory database and from its use of modern compression and Columnstore algorithms. As a result, the database has to read comparatively little data when calculating aggregations over large data volumes, and it can perform this task exceptionally quickly because the data is held in main memory.

However, these benefits can quickly be lost if the data model is poorly designed. In that case, major advantages in terms of runtime and agility are forfeited, both for the HANA database and for its users.

The Columnstore Index

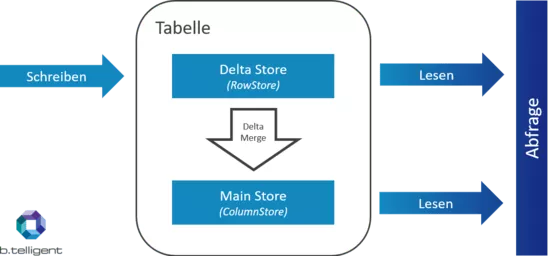

Data is stored in the HANA database in two steps:

First, the data is written to the delta store (row-based); it is then transferred into the main store during the delta merge. Other vendors use a similar process. The crucial point is that direct write operations on the compressed Columnstore index are not permitted. This is because the data in the index is compressed, and because converting new data into the column-based format is a comparatively complex operation.
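
The two-step write path can be illustrated with a small Python sketch. This is a toy model under stated assumptions, not HANA's actual implementation: the class `ColumnTable`, its methods, and the sorted-dictionary encoding are hypothetical names and simplifications chosen for illustration. It shows why new rows are collected in an uncompressed buffer first: merging them means rebuilding the dictionary and re-encoding the whole column.

```python
from bisect import bisect_left


class ColumnTable:
    """Toy single-column table: compressed main store plus write-optimised delta store."""

    def __init__(self):
        self.dictionary = []  # main store: sorted distinct values
        self.value_ids = []   # main store: one dictionary position per row
        self.delta = []       # delta store: uncompressed, append-only buffer

    def insert(self, value):
        # Writes never touch the compressed main store directly.
        self.delta.append(value)

    def delta_merge(self):
        # Decode the main store, append the delta rows, then rebuild the
        # sorted dictionary and re-encode every row (the "complex" step).
        rows = [self.dictionary[i] for i in self.value_ids] + self.delta
        self.dictionary = sorted(set(rows))
        self.value_ids = [bisect_left(self.dictionary, v) for v in rows]
        self.delta = []

    def scan(self):
        # A read has to combine the main store and the not-yet-merged delta.
        return [self.dictionary[i] for i in self.value_ids] + self.delta


table = ColumnTable()
for country in ["DE", "US", "DE", "FR"]:
    table.insert(country)
table.delta_merge()  # the four rows now sit in the main store in encoded form
table.insert("US")   # this row waits in the delta store until the next merge
```

After the merge, the main store holds three dictionary entries and four small integer IDs, while the latest insert is still visible to `scan()` through the delta buffer.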

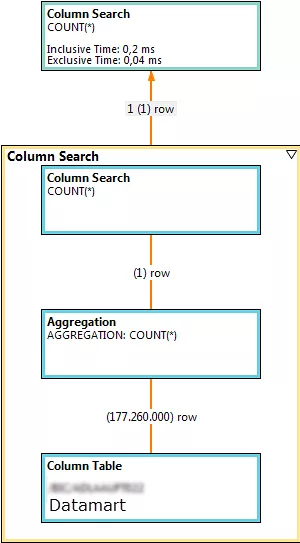

A Columnstore is particularly advantageous when only a few columns are read but many rows are aggregated. This access pattern is far more common in BI applications than in ERP applications. Because the data is already organised by column, access to the storage medium is reduced significantly.

In contrast, a classic Rowstore always has to read the full length of every row concerned, which creates a large overhead when calculating aggregates. A Columnstore index is therefore extremely fast at totalling large volumes of data, because only the columns required are selected before the aggregates are calculated.
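
The difference can be made concrete with a rough Python sketch that counts how many values each layout has to touch in order to total one column. The sample data and the function names `sum_row_store` and `sum_column_store` are assumptions made for illustration; real engines read pages and compressed vectors rather than Python objects.

```python
# Three order records with four fields each (illustrative sample data).
rows = [
    {"order_id": 1, "customer": "A", "country": "DE", "amount": 100},
    {"order_id": 2, "customer": "B", "country": "US", "amount": 250},
    {"order_id": 3, "customer": "A", "country": "DE", "amount": 50},
]


def sum_row_store(rows, field):
    """Row layout: each record is read as a whole, so every field is touched."""
    total = 0
    values_read = 0
    for row in rows:
        values_read += len(row)  # the full row length is read
        total += row[field]
    return total, values_read


# Column layout: one contiguous list per column.
columns = {name: [row[name] for row in rows] for name in rows[0]}


def sum_column_store(columns, field):
    """Column layout: only the requested column is read."""
    column = columns[field]
    return sum(column), len(column)


row_total, row_reads = sum_row_store(rows, "amount")
col_total, col_reads = sum_column_store(columns, "amount")
```

Both variants return the same total, but the row layout touches every field of every record, whereas the column layout touches one value per record.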

The HANA Join Engine

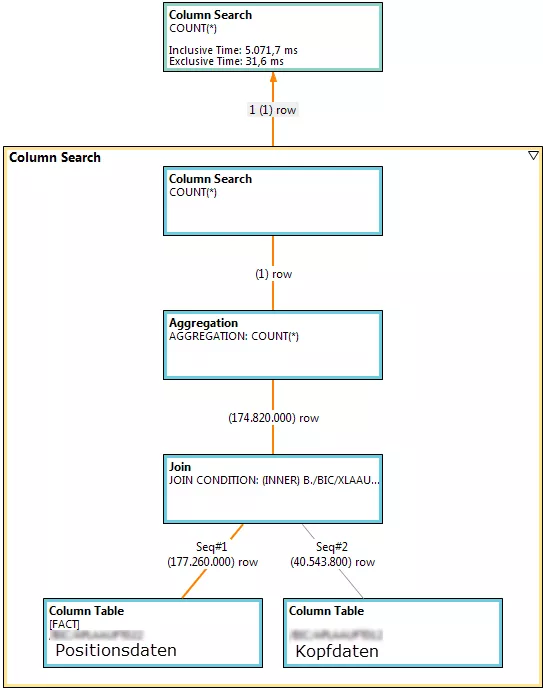

The HANA database has less work to do when a query displays few columns while aggregating many rows. But what happens when two tables are joined on a granular column?

Both tables are read at the granularity of the join criterion before aggregation takes place. As a result, the HANA database has to read much more data from main memory while processing it on the fly. The advantage gained from the Columnstore is thus negated by the data model, and the runtime increases considerably in comparison to a persistent data mart. When modelling data, it is essential to weigh both the flexibility and the performance of the model.

Here's a straightforward example:

A CompositeProvider joins two ADSOs, one containing order header data and the other order item data, to make them available together in reporting. There are 40,000,000 header records and 180,000,000 item records. Executing the query, and each navigation step within it, takes 5 seconds (which is extremely fast for this data volume, because HANA reads it from main memory). We then persist the join in another ADSO so that the Join Engine is no longer used at query time. The query now runs in under 1 second. The reason is simple: the HANA database reads less data but produces the same results.
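
The effect can be sketched in Python on a tiny scale. The data, the row-count metric, and the function names below are assumptions made for illustration; they do not reproduce the measurements above or the internals of the Join Engine. The sketch only shows that a join at query time has to read both tables at order level, while a persisted join is read from a single table and yields the same totals.

```python
from collections import defaultdict

headers = [(1, "ACME"), (2, "Globex"), (3, "ACME")]           # (order_id, customer)
items = [(1, 10.0), (1, 5.0), (2, 7.5), (3, 2.5), (3, 20.0)]  # (order_id, amount)


def revenue_join_at_query_time(headers, items):
    """Join on order_id during the query, then aggregate per customer."""
    customer_by_order = dict(headers)  # reads every header row
    totals = defaultdict(float)
    for order_id, amount in items:     # reads every item row
        totals[customer_by_order[order_id]] += amount
    return dict(totals), len(headers) + len(items)


def persist_join(headers, items):
    """Load step: store the customer on each item row once, ahead of any query."""
    customer_by_order = dict(headers)
    return [(customer_by_order[order_id], amount) for order_id, amount in items]


def revenue_from_persisted(persisted):
    """Query on the persisted result: a single table, no join."""
    totals = defaultdict(float)
    for customer, amount in persisted:
        totals[customer] += amount
    return dict(totals), len(persisted)


joined_totals, joined_rows_read = revenue_join_at_query_time(headers, items)
persisted = persist_join(headers, items)
persisted_totals, persisted_rows_read = revenue_from_persisted(persisted)
```

The price of the persisted variant is an extra load step and redundant storage, which is the flexibility-versus-performance trade-off described above.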

The same effect also influences performance in other scenarios:

One example is the distinct count (exception aggregation: counters for detailed values that are not null, that are null or that contain errors). To determine the key figure, the query reads the SIDs from the InfoObject via a join. If the InfoObject is very large, this can noticeably increase the query runtime whenever the key figure is displayed. One way to optimise this is to store the SIDs in the DataStore object, which avoids the join.
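
As a loose illustration, the following Python sketch contrasts the two variants. The SID table, the fact rows, and both function names are hypothetical; the sketch simplifies how BW actually resolves SIDs and only shows where the lookup against the InfoObject disappears.

```python
# Hypothetical SID table of an InfoObject: characteristic value -> surrogate ID.
sid_table = {"C100": 1, "C200": 2, "C300": 3, "C400": 4}

# Fact rows that only carry the characteristic value.
fact_keys = ["C100", "C200", "C100", "C300", "C200"]


def distinct_count_with_join(fact_keys, sid_table):
    """Every fact row is resolved against the SID table at query time."""
    return len({sid_table[key] for key in fact_keys})


# Assumed alternative: the SIDs are written into the fact rows during loading.
fact_sids = [sid_table[key] for key in fact_keys]


def distinct_count_stored_sids(fact_sids):
    """No lookup against the InfoObject is needed at query time."""
    return len(set(fact_sids))


count_with_join = distinct_count_with_join(fact_keys, sid_table)
count_stored = distinct_count_stored_sids(fact_sids)
```

Both functions return the same count; the second one simply moves the lookup from query time to load time.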

There will be more information on this topic in a subsequent article discussing how to optimise distinct count key figures.