With the launch of SAP BW on HANA in 2010, many earlier measures for improving performance in BW systems became obsolete. At the same time, the new platform raises many new questions. A particularly relevant one is whether it still makes sense to cache database reads in ABAP (Advanced Business Application Programming) routines. After all, with HANA the data is held in the main memory of the database that sits beneath the application server, and it is optimised for queries. The routines themselves, however, still run on the application server, so every read they issue has to travel to the database. This blog post therefore examines in detail whether a cache for database reads in ABAP routines is still worthwhile:

For frequently recurring data, the answer is yes. If, for example, the attribute "continent" is to be read from the InfoObject "country", the overhead of each access to HANA (SQL parser, network, etc.) is too high when it is incurred again for every single line. There are several technical layers between the ABAP program and the actual data, and they have to be passed through for every request. However, if several joins between tables are needed or the number of lines to be read is very large, the advantage tilts back towards the HANA database.

In my experience with customers who have large data volumes, a cache in ABAP can in some cases triple the speed of DTP execution in an SAP BW on HANA system. Of course, this always depends on the situation (e.g. data distribution, homogeneity of the data) and on the infrastructure in place. All of this is achieved without using shared memory. With shared memory, only one database request would be needed for all data packages together, i.e. per load; in practice, however, it is unnecessarily complicated to handle.
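As a rough illustration of why the scope of the cache matters, the following sketch models it outside SAP in Python rather than ABAP. `FakeDatabase`, `load_per_package` and `load_shared` are hypothetical names, and the "database" is only a request counter, not HANA. It contrasts a cache rebuilt for every data package with one filled once per load, which is the role shared memory would play.

```python
# Hypothetical model in Python (not ABAP): counts database reads per cache scope.
from typing import Dict, List


class FakeDatabase:
    """Stands in for the master data table; only counts the requests."""

    def __init__(self, rows: Dict[str, str]) -> None:
        self.rows = rows
        self.requests = 0

    def read_all(self) -> Dict[str, str]:
        self.requests += 1
        return dict(self.rows)


def load_per_package(db: FakeDatabase, packages: List[List[str]]) -> List[str]:
    """Cache rebuilt for every data package (internal table per package)."""
    out: List[str] = []
    for package in packages:
        cache = db.read_all()            # one request per package
        out.extend(cache[key] for key in package)
    return out


def load_shared(db: FakeDatabase, packages: List[List[str]]) -> List[str]:
    """Cache filled once and reused for all packages (the shared-memory idea)."""
    cache = db.read_all()                # one request for the whole load
    out: List[str] = []
    for package in packages:
        out.extend(cache[key] for key in package)
    return out


if __name__ == "__main__":
    rows = {"DE": "Europe", "JP": "Asia", "BR": "South America"}
    packages = [["DE", "JP"], ["BR", "DE"], ["JP", "JP"]]

    db = FakeDatabase(rows)
    load_per_package(db, packages)
    print(db.requests)                   # 3: one request per package

    db = FakeDatabase(rows)
    load_shared(db, packages)
    print(db.requests)                   # 1: one request per load
```

Both variants return the same values; only the number of database round trips differs.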

Frequently used cache implementations in ABAP

Full Table

The entire database table, or the part of it that is needed, is loaded into an internal table, which is then available throughout the DTP execution. The database is no longer accessed for each lookup. To ensure the best possible performance, the internal table should be declared as a SORTED or HASHED table.

Code:

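```abap
" lt_cache: SORTED (or HASHED) table with UNIQUE KEY schluessel
SELECT * FROM ztable INTO TABLE lt_cache.
LOOP AT result_package ASSIGNING <RESULT_FIELDS>.
  " a key read on a SORTED table uses binary search automatically
  READ TABLE lt_cache INTO ls_cache
       WITH TABLE KEY schluessel = <RESULT_FIELDS>-schluessel.
  IF sy-subrc = 0.
    MOVE-CORRESPONDING ls_cache TO <RESULT_FIELDS>.
  ENDIF.
ENDLOOP.
```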
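A key read on a SORTED table is a binary search. As a language-neutral illustration (Python rather than ABAP; `SortedCache` and the two-column rows are hypothetical), the same idea can be sketched with the standard `bisect` module. A HASHED table would correspond more closely to a plain Python `dict`.

```python
# Hypothetical model in Python (not ABAP) of a "Full Table" cache on a sorted table.
from bisect import bisect_left
from typing import List, Optional, Tuple

Row = Tuple[str, str]  # (schluessel, value): hypothetical two-column table


class SortedCache:
    """Rough analogue of an ABAP SORTED table with a unique key."""

    def __init__(self, rows: List[Row]) -> None:
        self._rows = sorted(rows)                    # sort once when filling
        self._keys = [key for key, _ in self._rows]

    def read(self, key: str) -> Optional[str]:
        """Binary search, O(log n); None plays the role of sy-subrc <> 0."""
        i = bisect_left(self._keys, key)
        if i < len(self._keys) and self._keys[i] == key:
            return self._rows[i][1]
        return None


if __name__ == "__main__":
    cache = SortedCache([("JP", "Asia"), ("DE", "Europe"), ("BR", "South America")])
    result_package = ["DE", "BR", "XX"]
    print([cache.read(k) for k in result_package])
    # ['Europe', 'South America', None]
```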

Stack

If dynamic calculations or lookups are repeated for a certain number of data records, it makes sense not to execute them several times. Instead, the result is stored in a key → value structure, in our case a SORTED table, and reused. During DTP execution, the changed database structure of HANA together with semantic partitioning of the DTP can ensure that records of the same type are processed within one package. This greatly increases the effectiveness of the stack cache, again without using shared memory.

Code:

```abap
LOOP AT result_package ASSIGNING <RESULT_FIELDS>.
  READ TABLE lt_cache INTO ls_cache
       WITH TABLE KEY schluessel = <RESULT_FIELDS>-schluessel.
  IF sy-subrc <> 0.
    " cache miss: read the database once and remember the result
    CLEAR ls_cache.
    SELECT SINGLE * FROM ztable INTO ls_cache
           WHERE schluessel = <RESULT_FIELDS>-schluessel.
    ls_cache-schluessel = <RESULT_FIELDS>-schluessel. " also cache keys not found
    INSERT ls_cache INTO TABLE lt_cache.
  ENDIF.
  MOVE-CORRESPONDING ls_cache TO <RESULT_FIELDS>.
ENDLOOP.
```
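The stack cache is essentially memoisation: the database is read only on the first request for a key. The following Python sketch (not ABAP; `LazyCache` and `fetch` are hypothetical names) shows the effect on the number of reads. Note that it uses a hash map (`dict`) rather than the SORTED table mentioned above.

```python
# Hypothetical model in Python (not ABAP) of the "Stack" cache.
from typing import Callable, Dict, Optional


class LazyCache:
    """Reads a key from the 'database' only on the first request."""

    def __init__(self, fetch: Callable[[str], Optional[str]]) -> None:
        self._fetch = fetch                        # stands in for SELECT SINGLE
        self._entries: Dict[str, Optional[str]] = {}
        self.db_reads = 0

    def get(self, key: str) -> Optional[str]:
        if key not in self._entries:               # cache miss (sy-subrc <> 0)
            self.db_reads += 1
            self._entries[key] = self._fetch(key)  # keys not found are cached too
        return self._entries[key]


if __name__ == "__main__":
    table = {"DE": "Europe", "FR": "Europe", "JP": "Asia"}
    cache = LazyCache(table.get)
    package = ["DE", "DE", "JP", "DE", "FR", "JP", "XX", "XX"]
    values = [cache.get(key) for key in package]
    print(cache.db_reads)                          # 4: one read per distinct key
```

The fewer distinct keys a package contains, the more lookups are served from memory, which is why grouping records of the same type into one package helps.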

Last Used

This is the simplest form of caching, and it works well together with semantic partitioning, which delivers the data sorted by the selected InfoObjects. A simple structure and a case distinction are then enough to check whether the structure has to be reloaded. For example, order items can be loaded semantically partitioned by order, so that the items also arrive sorted by order in the start and end routines. In ABAP, it is then easy to check whether the new line still belongs to the same order or whether the structure has to be updated. Depending on the size of the groups, this reduces the number of SQL requests simply but considerably.

Code:

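```abap
LOOP AT result_package ASSIGNING <RESULT_FIELDS>.
  " data arrives sorted, so only read again when the key changes
  IF ls_cache-schluessel <> <RESULT_FIELDS>-schluessel.
    CLEAR ls_cache.
    SELECT SINGLE * FROM ztable INTO ls_cache
           WHERE schluessel = <RESULT_FIELDS>-schluessel.
    ls_cache-schluessel = <RESULT_FIELDS>-schluessel.
  ENDIF.
  MOVE-CORRESPONDING ls_cache TO <RESULT_FIELDS>.
ENDLOOP.
```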
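Why sorting matters for this cache can be shown with a small Python model (not ABAP; `last_used_lookup` is a hypothetical helper). It keeps only the most recently read row and counts database reads. Unlike the ABAP snippet, it uses `None` as a sentinel, so an initial key value on the first line is also read.

```python
# Hypothetical model in Python (not ABAP) of the "Last Used" cache.
from typing import Callable, Iterable, List, Optional, Tuple


def last_used_lookup(
    keys: Iterable[str], fetch: Callable[[str], Optional[str]]
) -> Tuple[List[Optional[str]], int]:
    """Return the looked-up values and the number of database reads."""
    values: List[Optional[str]] = []
    reads = 0
    last_key: Optional[str] = None          # sentinel: nothing cached yet
    last_value: Optional[str] = None
    for key in keys:
        if key != last_key:                 # key changed: reload the row
            last_value = fetch(key)
            last_key = key
            reads += 1
        values.append(last_value)
    return values, reads


if __name__ == "__main__":
    orders = {"4711": "customer A", "4712": "customer B"}
    sorted_items = ["4711", "4711", "4711", "4712", "4712"]
    unsorted_items = ["4711", "4712", "4711", "4712", "4711"]
    print(last_used_lookup(sorted_items, orders.get)[1])    # 2 reads
    print(last_used_lookup(unsorted_items, orders.get)[1])  # 5 reads
```

With sorted input the number of reads equals the number of groups; with unsorted input the cache hardly helps.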

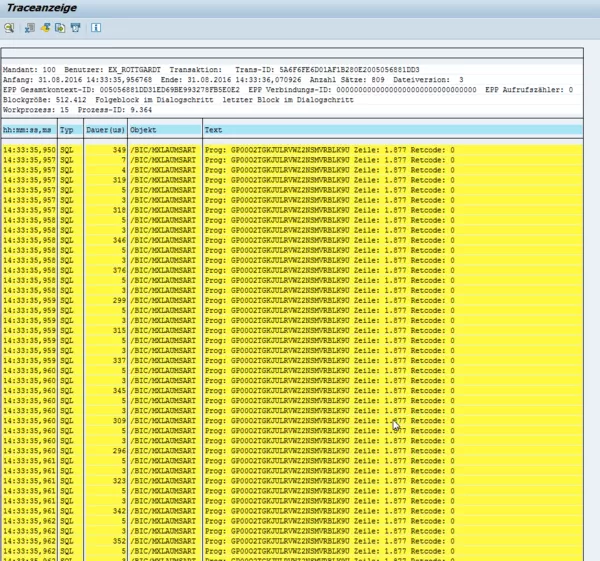

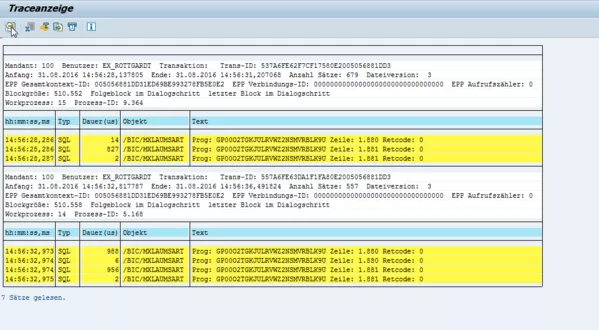

Comparison of execution with and without cache

To illustrate how the processing times differ with and without a cache, the following section runs and compares executions with different cache models. Each execution processes 17,516,672 data records. The DTP package size is 200,000 records, which determines how many SQL statements are sent to the HANA database in the package-wise cached variant.

To highlight the differences in processing time, several possible implementations were compared. To establish a baseline, the load without derivations (a simple transformation with direct assignment) was first executed with and without HANA optimisation. Then two variants were tested: one with an SQL request per data line, and one with a single "Full Table" cache per package (i.e. without shared memory).

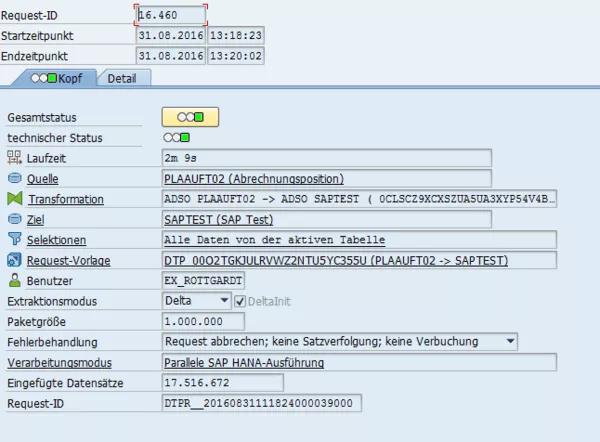

The SAP HANA-optimised execution with pushdown requires 2 minutes and 9 seconds.

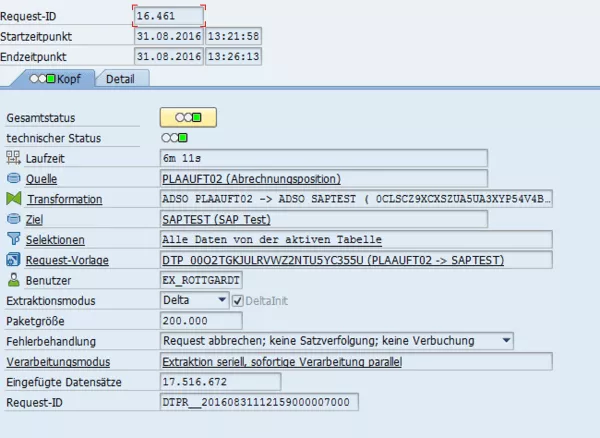

Execution without SAP HANA optimisation takes almost three times as long: exactly 6 minutes and 11 seconds.

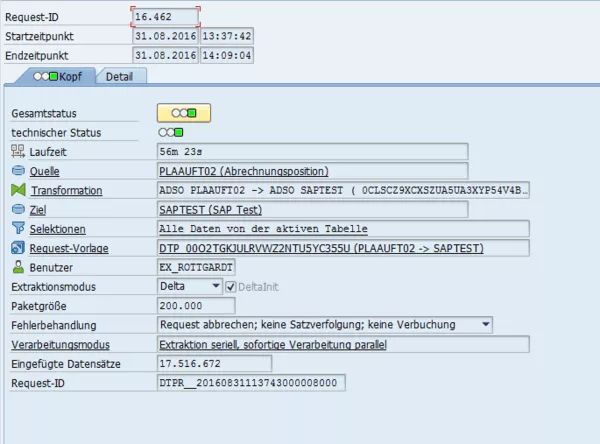

Execution with one SQL request per data line (SELECT SINGLE) increases the processing time of the DTP almost tenfold.

Result

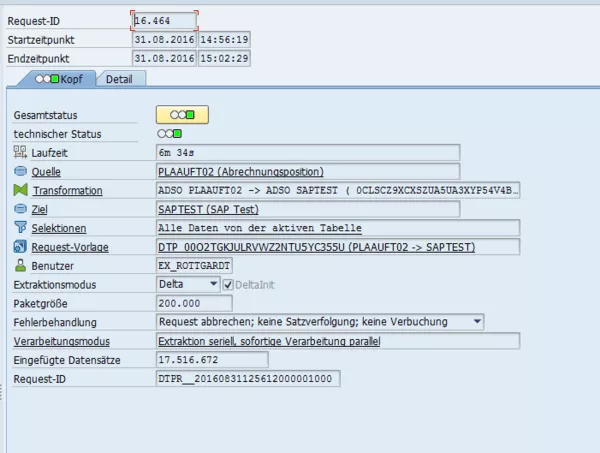

Now to the exciting result of the investigation: using a "Full Table" cache instead of executing an SQL statement many times is much more efficient. Only one SQL request per package (with a size of 200,000 lines) was needed to fill an internal table, and the processing time is only marginally higher than that of the execution without HANA optimisation. So why is there such a large discrepancy despite HANA's in-memory processing?

Comparing the accesses to the M table, from which we read the data, clearly shows that each request has a certain basic processing time. In the test case, this is approximately 300 µs (0.3 ms). Although this initially sounds negligible, it becomes one of the decisive factors over millions of executions. It is explained by the usual latencies, such as the network, the ABAP Open SQL abstraction, etc.

The simplified calculation of the processing time then looks as follows:

17,516,672 × 300 µs ÷ 3 parallel processes ≈ 1,751.7 s ≈ 29.19 minutes of overhead for the reads, which roughly corresponds to the measured increase in processing time.

In the second example, with the cache, the request duration is slightly higher at 800 µs, but the request is executed only once per package, i.e. once for every 200,000 lines. Processing within the internal table is then extremely fast and hardly noticeable.
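The two overhead estimates can be reproduced with a few lines of arithmetic. This Python sketch assumes, as the simplified calculation does, that request latency is spread evenly over 3 parallel processes and ignores all other costs. It takes the basic request time as 300 microseconds, the value that reproduces the 29.19-minute figure (300 milliseconds would give roughly 486 hours), and 800 microseconds per package read. `overhead_seconds` is a hypothetical helper.

```python
# Hypothetical back-of-the-envelope model of the read overhead in both variants.
import math

ROWS = 17_516_672        # records in the test load
PACKAGE_SIZE = 200_000   # DTP package size
PARALLEL = 3             # parallel DTP processes


def overhead_seconds(requests: int, latency_s: float) -> float:
    """Total request latency, assumed to spread evenly over the processes."""
    return requests * latency_s / PARALLEL


if __name__ == "__main__":
    per_row = overhead_seconds(ROWS, 300e-6)            # one request per line
    packages = math.ceil(ROWS / PACKAGE_SIZE)           # 88 packages
    per_package = overhead_seconds(packages, 800e-6)    # one request per package
    print(f"per line: {per_row / 60:.2f} min")          # per line: 29.19 min
    print(f"per package: {per_package:.3f} s")          # per package: 0.023 s
    # Even at 800 ms per package read, the total would only be about 23.5 s.
    print(f"{overhead_seconds(packages, 0.8):.1f} s")   # 23.5 s
```

Whichever unit is assumed for the package read, the per-package overhead stays negligible compared with the per-line reads.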

Conclusion

It is thus clear that using a cache in ABAP still makes sense. At the same time, it is an advantage that more complex multi-stage JOINs or other logic that used to take a long time to process in the database can now simply be expressed in SQL. For recurring requests of the same type, however, the fixed overhead of each request adds up and becomes noticeable overall. From version 7.5 SP4 onwards, the technical possibilities increase further. For example, HANA-optimised execution and ABAP execution can be combined when the two transformations are connected by an InfoSource (partial SAP HANA execution). You can find extensive details on the innovations here.

In summary, even when HANA is used, the optimised execution of derivations and rules still has to be considered, and the right place to execute the code must be chosen. With the ever deeper integration of HANA into SAP BW with each support package, SAP will create further exciting solutions, which we will report on in this blog.

Summary of the processing times

Additional information for interested parties

"Full Table" cache example code

Also enclosed is a simplified code example for a "Full Table" cache. In general, it is recommended to implement lookups in classes: classes can be called directly in test cases, extensions are easier to implement, and switching to shared memory is straightforward. Should you have any questions, feel free to contact me.

"Full Table" cache example of a formula routine: global declaration

"Full Table" cache example of a formula routine: code to be executed
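The recommendation to wrap lookups in classes can be sketched in Python (this is not the author's ABAP example; `ContinentLookup` and the injectable `loader` are hypothetical). Because the data source is passed in, a test can supply fixed rows instead of reading the database, and the table is loaded only on first use.

```python
# Hypothetical model in Python (not ABAP): a lookup class with an injectable loader.
from typing import Callable, Dict, Iterable, Optional, Tuple

Loader = Callable[[], Iterable[Tuple[str, str]]]


class ContinentLookup:
    """'Full Table' lookup country -> continent, filled on first use."""

    def __init__(self, loader: Loader) -> None:
        self._loader = loader
        self._cache: Optional[Dict[str, str]] = None

    def get(self, country: str) -> Optional[str]:
        if self._cache is None:                 # load the whole table once
            self._cache = dict(self._loader())
        return self._cache.get(country)


if __name__ == "__main__":
    # In a unit test the loader returns fixed rows instead of reading the database.
    lookup = ContinentLookup(lambda: [("DE", "Europe"), ("JP", "Asia")])
    print(lookup.get("JP"), lookup.get("XX"))   # Asia None
```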