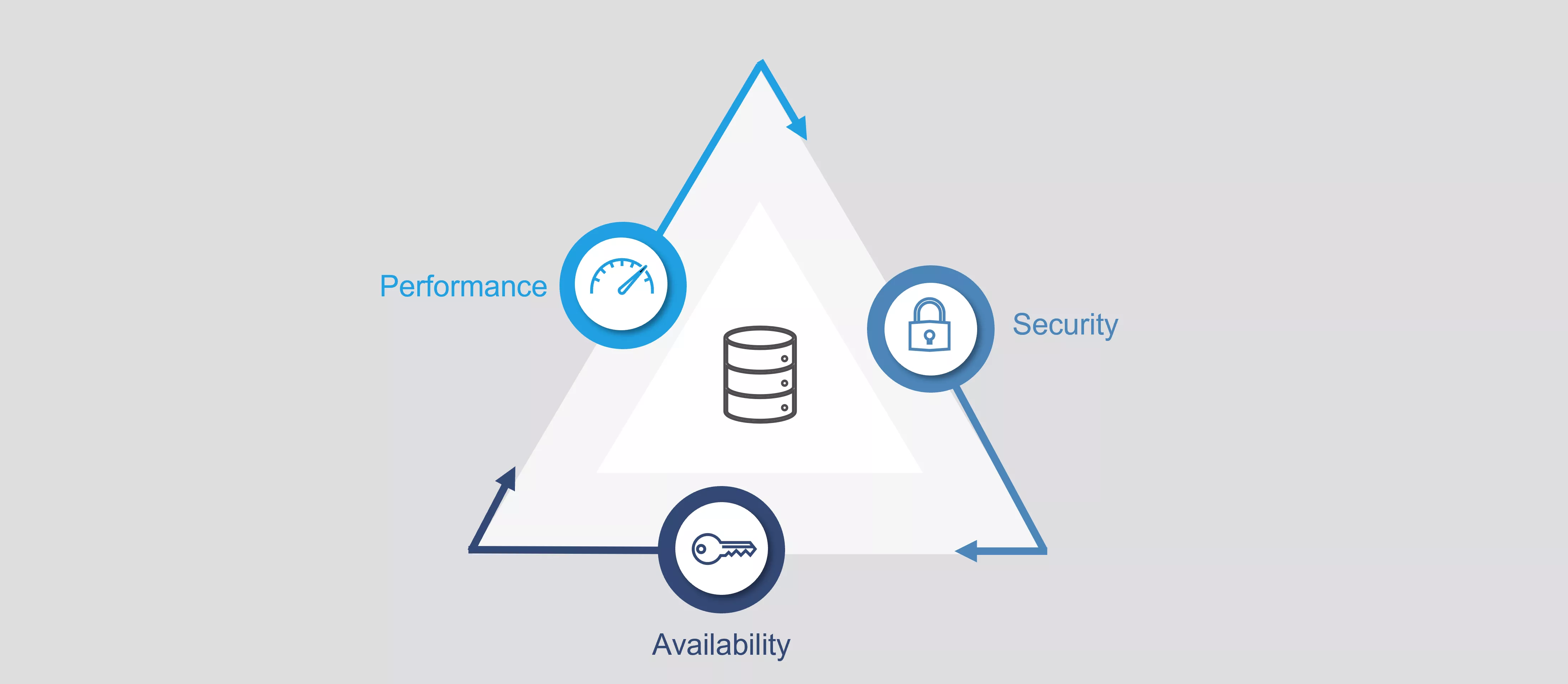

Modern data platforms must excel above all in three areas: data availability, data security, and data processing speed. In this blog series, we describe what such a solution can look like when built with Exasol and Protegrity, and how efficient data analysis and security can be achieved "out of the box" in the context of PCI DSS (Payment Card Industry Data Security Standard) and GDPR. Why not build such security solutions yourself? In other words, what does it take to integrate data security into a data platform?

A brief introduction to the three pillars:

Availability

Data availability means that a data platform must be built so that its data are easy to reach through simple services for employees, partners, and possibly also customers and automated processes. Beyond high resilience against failure, this means employees should be able to access data easily, for example via SQL or a reporting tool. The following features deserve particular emphasis:

Access for all departments via SQL, Python, Excel, or a reporting tool (Power BI, Tableau, etc.)

Structured storage in "simple" relational data models, ideally in a dimensional model

High transparency about how data have been prepared (e.g. through virtualization, i.e. encapsulating business logic in views)

Availability in particular therefore places high demands on performance and security: users want rapid responses, and for security reasons, data must be protected differently depending on the user group.

Performance

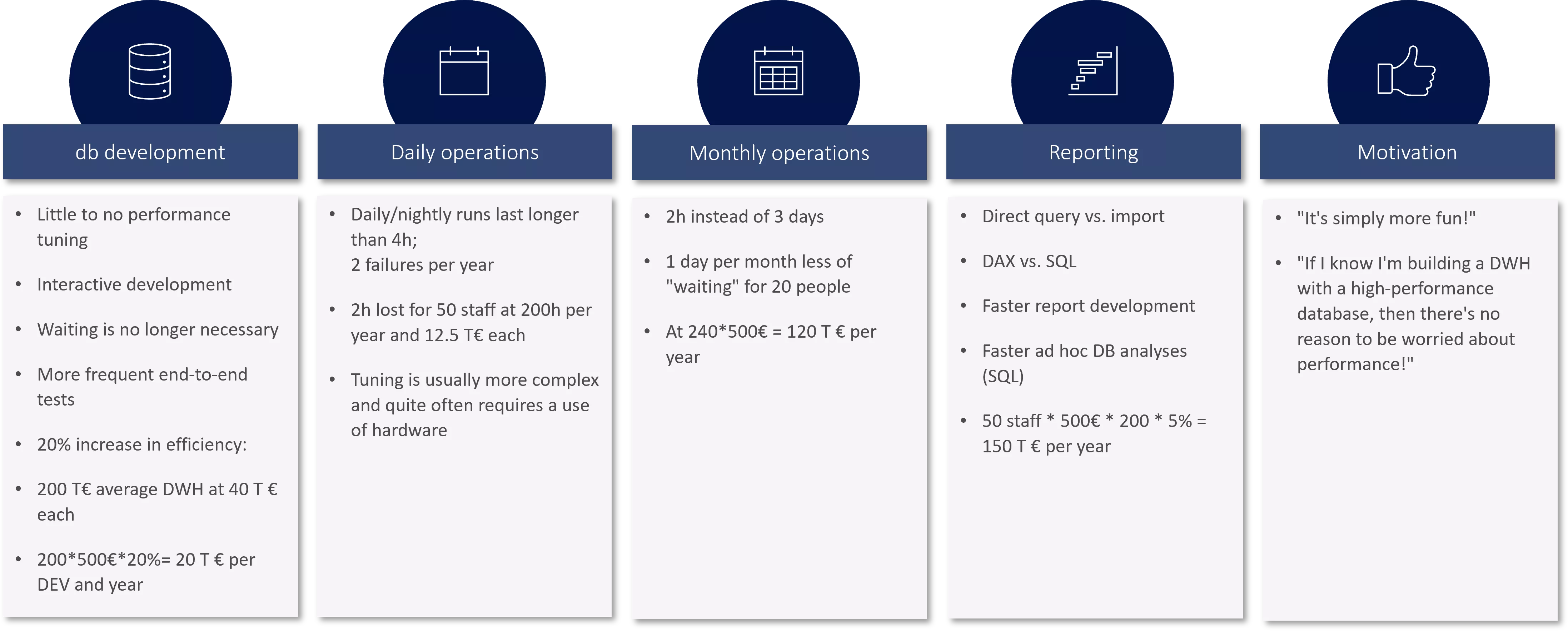

We consider high query and processing performance to be central to a data platform's business case:

Speed is not an end in itself, but we believe it significantly affects the efficiency of knowledge workers who analyze data from the platform every day. This also makes it straightforward to calculate business cases for investing in and operating a data platform.

A few sample calculations illustrate this. Even with moderate assumptions, economies of scale (a large number of affected employees) produce savings from the start that should offset the cost of a data platform.

Running and measuring the outlined scenarios in more detail would certainly reveal significantly higher efficiency gains.
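The underlying arithmetic of such a business case can be sketched in a few lines. The following is a minimal Python sketch, not the calculation behind the article's examples. It assumes that savings come only from shorter query wait times and that saved time is valued at a fully loaded hourly labor cost. The function names and every input value are hypothetical.

```python
def annual_hours_saved(employees: int, queries_per_day: float,
                       seconds_saved_per_query: float, working_days: int) -> float:
    """Working hours saved per year if every query returns faster."""
    seconds_saved = employees * queries_per_day * seconds_saved_per_query * working_days
    return seconds_saved / 3600


def annual_savings(employees: int, queries_per_day: float,
                   seconds_saved_per_query: float, working_days: int,
                   hourly_cost: float) -> float:
    """Saved hours valued at an assumed fully loaded hourly labor cost."""
    hours = annual_hours_saved(employees, queries_per_day,
                               seconds_saved_per_query, working_days)
    return hours * hourly_cost


if __name__ == "__main__":
    # Hypothetical inputs for illustration only; not figures from this article.
    hours = annual_hours_saved(500, 20, 30, 220)
    savings = annual_savings(500, 20, 30, 220, hourly_cost=60.0)
    print(f"{hours:,.0f} hours saved, worth {savings:,.0f} per year")
```

Consider 500 employees, each running 20 queries a day and saving 30 seconds per query over 220 working days. With these made-up inputs, the sketch yields about 18,333 hours, which at 60 per hour amounts to roughly 1.1 million per year in the chosen currency. Because the formula is linear in the number of employees, the economies of scale mentioned above follow directly.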

Security

The final pillar, data security, is a requirement with a rather "binary" outcome. It is binary because any gap in security with respect to GDPR or PCI DSS compliance can lead to the platform being shut down, or at least to massive restrictions on its use. The requirements follow directly from the goal of making data available to everyone (people and processes):

Data requiring protection are needed for customer-centric analyses and processes.

Data must be securely loaded into the data platform, stored there and kept ready for queries.

Ideally, this security is provided by a central solution rather than by isolated ones.

Doing without PII data, whether as a matter of principle or at the outset, usually creates a need for special techniques that ultimately raise costs. Examples include duplicating tables and schemas for different user groups, or implementing selective encryption and decryption logic "manually" to protect access paths. Both make ETL processes and authorization concepts more complex.
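To show what such hand-built logic involves, here is a minimal Python sketch of selective protection per user group. It uses simple masking in place of real encryption and assumes a hypothetical role policy (`CLEAR_TEXT_ROLES`). The names `mask`, `protect_row`, and the role names are purely illustrative and are not Exasol or Protegrity APIs.

```python
from __future__ import annotations

# Hypothetical policy: roles allowed to see each protected column in clear text.
# Columns not listed here are treated as non-sensitive.
CLEAR_TEXT_ROLES: dict[str, set[str]] = {
    "card_number": {"fraud_analyst"},
    "email": {"fraud_analyst", "crm"},
}


def mask(value: str) -> str:
    """Hide all but the last four characters; hide short values completely."""
    if len(value) <= 4:
        return "*" * len(value)
    return "*" * (len(value) - 4) + value[-4:]


def protect_row(row: dict[str, str], role: str) -> dict[str, str]:
    """Return a copy of the row with sensitive columns masked for this role."""
    protected = {}
    for column, value in row.items():
        allowed_roles = CLEAR_TEXT_ROLES.get(column)
        if allowed_roles is None or role in allowed_roles:
            protected[column] = value
        else:
            protected[column] = mask(value)
    return protected


if __name__ == "__main__":
    row = {"customer_id": "42", "card_number": "4111111111111111",
           "email": "jane@example.com"}
    # The hypothetical "crm" role sees the e-mail address but only the last
    # four digits of the card number.
    print(protect_row(row, "crm"))
```

Even this toy version must be applied consistently in every ETL job, view, and export path, and each policy change must be rolled out everywhere. This is the kind of duplicated effort that a central solution is meant to avoid.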

The challenge is that standardized, fundamental solutions are still rarely implemented, especially in complex system landscapes such as a data platform. Instead, many components are handled and secured separately, which increases implementation and governance effort.

A closer look at the three pillars quickly reveals conflicts between their goals that need to be balanced:

Absolute data security is possible only if the data are never loaded into the data platform. However, that contradicts availability.

High query performance can be achieved by heavily aggregating data, or by loading only the data needed for very specific processing requirements, so that less data has to be processed. However, this also conflicts with the requirement for data availability.

Very complex protection mechanisms lead to complex processing, reduced availability, and consequently to limits on processing performance.

Making data available to everyone potentially violates the need-to-know principle of data protection and creates bottlenecks in processing performance.

Conclusion and outlook

All three pillars therefore have equally high priority when meeting the requirements of a data platform:

Availability for reliable and rapid responses to analytical queries.

Performance because it ultimately determines the platform's operating efficiency.

Security because a failure to guarantee it can completely or partly disrupt the platform's operation.

These priorities often have to be reconciled with what is feasible. The more mechanisms and transformations are needed to ensure proper security, the longer it takes to provide and query data. Performance can then only be salvaged by dispensing with parts of the data (at least the PII data). As with so many other aspects of BI, this apparent dilemma can be resolved through skillful use of the right technology.

In the second part of this blog series, we look at a solution built on Exasol and Protegrity and at the distinguishing features of this combination.
