When we do simple descriptive data exploration, we are seldom content with analyzing mean values alone. More often, we take a closer look at the distribution: histograms, quantiles, and the like. Mean values alone can lead to erroneous conclusions and hide important information. But if this is the case, why do we forget about it as soon as we build predictive models? These usually aim only at mean values, and mean values can lie.

Applications in logistics: quantile regression is a standard feature in Amazon Forecast

There are use cases in which quantile predictions are indispensable. Logistics forecasting is an area where this is often the case. Cutting-edge players like Amazon react to this demand with products like Amazon Forecast, where the prediction of arbitrary quantiles is one of the core features.
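A small simulation shows why the mean is the wrong target for a typical logistics decision such as how much stock to hold. The sketch below assumes a hypothetical, right-skewed (log-normal) daily demand; the distribution and its parameters are illustrative assumptions, not real data. It compares the share of days on which the stock covers demand when stocking at the mean versus at the 90% quantile.

```python
import numpy as np

# Hypothetical daily demand for one product. A right-skewed (log-normal)
# distribution is assumed here purely for illustration, not real data.
rng = np.random.default_rng(seed=0)
demand = rng.lognormal(mean=3.0, sigma=0.8, size=100_000)

def service_level(stock, demand):
    """Share of days on which the stock level covers the whole demand."""
    return float(np.mean(demand <= stock))

stock_at_mean = demand.mean()
stock_at_q90 = np.quantile(demand, 0.9)

print(f"stock = mean demand:  {service_level(stock_at_mean, demand):.1%} of days covered")
print(f"stock = 90% quantile: {service_level(stock_at_q90, demand):.1%} of days covered")
```

With these assumed parameters, stocking at the mean covers only about two thirds of the days (for a log-normal with σ = 0.8, the theoretical value is Φ(0.4) ≈ 66%), while stocking at the 90% quantile covers 90% of the days by construction.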

Unfortunately, quantile regression is hard, at least with gradient boosted trees. The rest of this post will show why it's hard and explain how the difficulty can be overcome to produce quantile predictions with gradient boosted tree models. For tabular data, gradient boosted trees are still state of the art, despite frequent claims that deep learning has finally caught up. I'll be among the first to celebrate the new possibilities when it actually has, because I already like to play with deep learning for tabular data. But we're not there yet.

The best-known implementation of gradient boosted trees is probably XGBoost, followed by LightGBM and CatBoost. XGBoost is known for its flexibility and wealth of options, and quantile regression was requested as a feature as early as 2016. Unfortunately, at the time of writing it still hasn't been implemented.

Quantile regression in XGBoost: isn't there an easy way?

If you've worked with XGBoost in some depth, you might ask yourself why we can't simply do quantile regression with a custom loss function. After all, quantile regression is well known to correspond to minimizing the following simple loss function (often called the pinball loss) for any quantile q between 0 and 1, where x = y - ŷ is the residual (observed value minus prediction):

$$l(x) = x \left(q - \mathbf{1}_{\{x < 0\}}\right)$$
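A minimal NumPy sketch of this loss, with x taken as y_true - y_pred as above. The function name `pinball_loss` is a hypothetical helper, not a library API. The second half checks numerically the property that makes the loss useful: among all constant predictions, the one with the smallest average loss is the empirical q-quantile of the data (the exponential sample is made up for illustration).

```python
import numpy as np

def pinball_loss(y_true, y_pred, q):
    """Quantile (pinball) loss l(x) = x * (q - 1{x < 0}), with x = y_true - y_pred."""
    x = np.asarray(y_true, dtype=float) - np.asarray(y_pred, dtype=float)
    return x * (q - np.where(x < 0, 1.0, 0.0))

# Under-predicting by 2 costs q * 2; over-predicting by 2 costs (1 - q) * 2.
print(pinball_loss(10.0, 8.0, q=0.9), pinball_loss(8.0, 10.0, q=0.9))

# Search a grid of constant predictions for the one with the lowest mean loss.
rng = np.random.default_rng(seed=1)
y = rng.exponential(scale=10.0, size=2_000)
q = 0.9
candidates = np.linspace(0.0, 60.0, 6_001)
mean_losses = [pinball_loss(y, c, q).mean() for c in candidates]
best = candidates[int(np.argmin(mean_losses))]
print("best constant:", best, " empirical 90% quantile:", np.quantile(y, q))
```

The asymmetry between q and 1 - q is what pulls the minimizer away from the median towards the requested quantile.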

To use it as a custom loss function in XGBoost, we also have to provide its first and second derivatives. And that's where the problems begin. First, this loss function is not differentiable at 0, so the derivatives don't exist everywhere. Second, the second derivative is zero wherever it exists. The second point is especially problematic: the second-order optimization that makes XGBoost and its peers in the current generation of implementations so fast uses the second derivative as curvature information to decide how large each step should be. With an all-zero second derivative, that information is simply missing, and the algorithm performs badly. So, unfortunately, it takes more than just a custom objective function.
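The sketch below shows what a naive custom objective would hand to XGBoost. XGBoost's `xgboost.train(..., obj=...)` expects a function `obj(predt, dtrain)` returning per-sample gradients and Hessians; the helper names here are hypothetical. To show the consequence, it also evaluates the second-order leaf weight -G / (H + λ) from the XGBoost paper, where G and H are the sums of first and second derivatives in a leaf and λ is the L2 regularization (`lambda`/`reg_lambda`, default 1); the learning rate and the L1 term are ignored for simplicity.

```python
import numpy as np

def naive_quantile_objective(y_pred, y_true, q=0.5):
    """Gradient and Hessian of the pinball loss with respect to the prediction.

    With residual x = y_true - y_pred, the gradient is 1{y_true < y_pred} - q.
    The Hessian is 0 wherever it exists (at x == 0 it is undefined).
    """
    residual = np.asarray(y_true, dtype=float) - np.asarray(y_pred, dtype=float)
    grad = (residual < 0).astype(float) - q
    hess = np.zeros_like(residual)  # no curvature information at all
    return grad, hess

def xgb_quantile_obj(predt, dtrain, q=0.5):
    """Adapter with the (predt, dtrain) signature used by xgboost.train(..., obj=...)."""
    return naive_quantile_objective(predt, dtrain.get_label(), q)

def xgb_leaf_weight(grad, hess, reg_lambda=1.0):
    """Second-order leaf weight -G / (H + lambda), ignoring eta and the L1 term."""
    return -grad.sum() / (hess.sum() + reg_lambda)

# Every sample is under-predicted by exactly 1, yet the step size depends
# only on how many samples land in the leaf: 5.0 for 10 samples, 500.0 for 1000.
for n in (10, 1_000):
    grad, hess = naive_quantile_objective(np.zeros(n), np.ones(n), q=0.5)
    print(n, xgb_leaf_weight(grad, hess))
```

Because H is zero, the step is set by λ and the leaf's sample count rather than by the loss, which is exactly the missing "orientation" described above.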

Huber loss: If your loss isn't nice, you have to Huberize

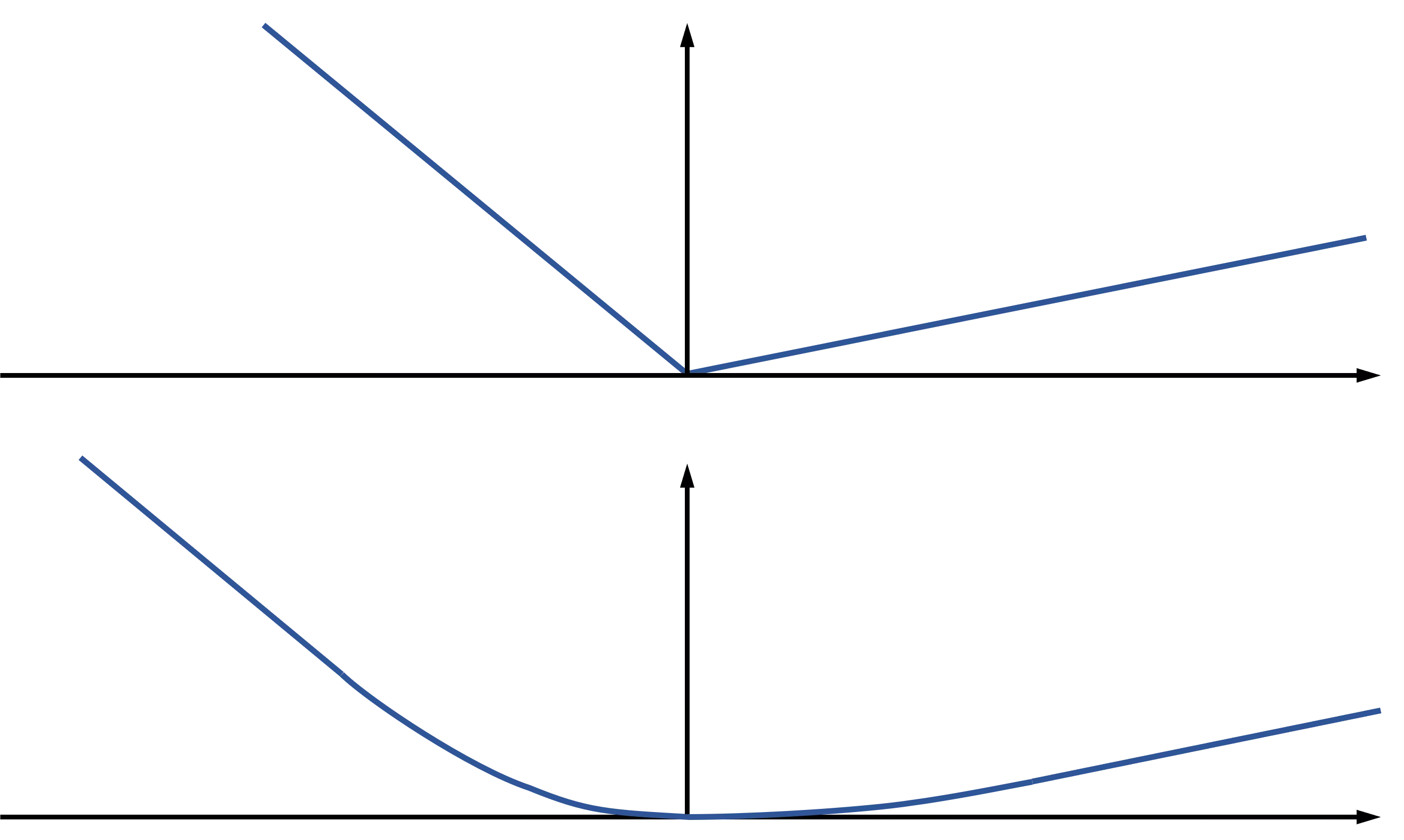

There is a standard remedy for some of the nastiness of the quantile loss: you can Huberize it, i.e., replace the kink of the function near 0 with a smooth quadratic piece. Figure 1 shows the difference between the standard quantile loss (upper part of the figure) and the Huberized quantile loss (below). This solves the first problem, but unfortunately not the second, bigger one: the second derivative is still not very helpful, as it is zero everywhere except in a small region around 0. Hence, the Huber quantile loss won't solve our problem. It is still useful in many other situations, and if you want to learn more about Huber loss for quantile regression, I recommend the paper by Aravkin et al.
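To see why Huberizing fixes only the first problem, here is a sketch of one common Huberized quantile loss: an asymmetric Huber function that is quadratic for |x| ≤ δ and matches the pinball loss (up to a constant) beyond that. This particular form and the helper names are assumptions for illustration; it is not necessarily the exact formulation used by Aravkin et al. As before, x is the residual y_true - y_pred.

```python
import numpy as np

def huber_quantile_loss(x, q, delta=1.0):
    """Asymmetric Huber loss: quadratic for |x| <= delta, linear beyond.

    Assumed form for illustration; weights q (x >= 0) and 1 - q (x < 0)
    give it the same asymmetry as the pinball loss.
    """
    x = np.asarray(x, dtype=float)
    weight = np.where(x >= 0, q, 1.0 - q)
    abs_x = np.abs(x)
    huber = np.where(abs_x <= delta, 0.5 * x**2 / delta, abs_x - 0.5 * delta)
    return weight * huber

def huber_quantile_derivatives(x, q, delta=1.0):
    """First and second derivative of huber_quantile_loss with respect to x."""
    x = np.asarray(x, dtype=float)
    weight = np.where(x >= 0, q, 1.0 - q)
    first = weight * np.clip(x / delta, -1.0, 1.0)  # smooth through x = 0
    second = np.where(np.abs(x) <= delta, weight / delta, 0.0)
    return first, second

x = np.array([-3.0, -0.5, 0.0, 0.5, 3.0])
first, second = huber_quantile_derivatives(x, q=0.9, delta=1.0)
print(first)   # continuous everywhere: problem one is solved
print(second)  # nonzero only for residuals inside [-delta, delta]: problem two remains
```

For any residual larger than δ in absolute value, the second derivative is still exactly zero, so a second-order booster gets no curvature information from most of the data.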

How to solve an unsolvable problem

Okay, so maybe it was just a bad idea to combine the second-order methods of a modern gradient boosted trees implementation with quantile loss. Maybe neural networks are actually the better choice here, because they offer some flexibility regarding the optimization algorithm, and there are alternatives that don't require the second derivative. But wait... LightGBM actually offers quantile regression as a standard feature!

The LightGBM developer community found a way to solve the problem with a reasonable approximation that delivers working quantile regression while keeping the speed benefits of second-order optimization. As J. Mark Hou notes in his blog entry, the trick they used was first implemented in scikit-learn's gradient boosted trees algorithm. To work around the vanishing second derivative, the second derivative is simply treated as a constant while a tree is grown, and once the tree is built, the values in its leaves are recomputed directly from the quantile loss itself: each leaf gets the q-quantile of the residuals of the training samples that fall into it. As this only happens in the leaves, it is computationally rather cheap, and it fixes the values that matter most for the overall results. It doesn't fix the loss used to choose the splits in the tree, but that is acceptable, given that the splitting algorithms are rough heuristics anyway. A paper recently published in Nature is only one of several sources that report very good results using LightGBM's implementation of quantile regression.
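The trick can be reproduced on a toy scale in a few dozen lines. The sketch below boosts stumps (depth-1 trees on a single feature) for the q-quantile: splits are chosen from the pinball-loss gradients alone, with every Hessian treated as 1, and after each split both leaf values are renewed to the q-quantile of the residuals they contain. All helper names and the synthetic data are hypothetical; LightGBM and scikit-learn implement the same idea in compiled code with full trees, and in practice you would just call `lightgbm.LGBMRegressor(objective="quantile", alpha=q)` or scikit-learn's `GradientBoostingRegressor(loss="quantile", alpha=q)`.

```python
import numpy as np

def best_stump_split(x, grad):
    """Threshold on one feature that maximizes the gradient-based split gain.

    With every Hessian fixed to 1, the second-order gain G_L^2/H_L + G_R^2/H_R
    becomes G_L^2/n_L + G_R^2/n_R.
    """
    order = np.argsort(x)
    xs, gs = x[order], grad[order]
    n = len(xs)
    g_left = np.cumsum(gs)[:-1]  # gradient sums of the n-1 candidate left leaves
    g_right = gs.sum() - g_left
    n_left = np.arange(1, n)
    gain = g_left**2 / n_left + g_right**2 / (n - n_left)
    gain[xs[1:] == xs[:-1]] = -np.inf  # cannot split between identical values
    k = int(np.argmax(gain))
    return 0.5 * (xs[k] + xs[k + 1])

def fit_quantile_boosting(x, y, q, n_rounds=300, learning_rate=0.1):
    """Boosted stumps for the q-quantile with leaf renewal (hypothetical helper)."""
    init = float(np.quantile(y, q))  # start from the unconditional q-quantile
    pred = np.full(len(y), init)
    stumps = []
    for _ in range(n_rounds):
        # Pinball-loss gradient w.r.t. the prediction; the Hessian is treated as 1.
        grad = (y < pred).astype(float) - q
        thr = best_stump_split(x, grad)
        left = x <= thr
        # Leaf renewal: each leaf gets the q-quantile of its residuals
        # instead of the second-order step -G/H, which would divide by zero.
        v_left = np.quantile(y[left] - pred[left], q)
        v_right = np.quantile(y[~left] - pred[~left], q)
        pred = pred + learning_rate * np.where(left, v_left, v_right)
        stumps.append((thr, v_left, v_right))
    return {"init": init, "learning_rate": learning_rate, "stumps": stumps}

def predict(model, x):
    """Sum the initial quantile and all scaled stump outputs."""
    pred = np.full(len(x), model["init"])
    for thr, v_left, v_right in model["stumps"]:
        pred += model["learning_rate"] * np.where(x <= thr, v_left, v_right)
    return pred

# Hypothetical data whose spread grows with x, so the 90% quantile is not a shifted mean.
rng = np.random.default_rng(seed=2)
x = rng.uniform(0.0, 10.0, size=2_000)
y = x + rng.normal(0.0, 0.5 + 0.3 * x)
model = fit_quantile_boosting(x, y, q=0.9)
fitted = predict(model, x)
print("share of y below the fitted 90% quantile:", np.mean(y <= fitted))
```

On this synthetic data, roughly 90% of the observations should end up below the fitted curve both for small and for large x, even though the spread of y grows with x. Note that the split search never uses the quantile loss directly, only its gradients, which mirrors the limitation described above.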

Quantile regression everywhere: LightGBM saves the day

Finally, we've found a state-of-the-art implementation of gradient boosted trees that comes with the additional flexibility of quantile regression. Now it's your turn: look at your models and, as a first step, find out which of them could profit from replacing the mean with the median, which is less vulnerable to outliers. Once you've gotten used to this new instrument, you may find yourself seeing opportunities for different flavors of quantile regression everywhere.
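As a first experiment before switching a model's target, it helps to see how differently the two statistics react to a single extreme value. The delivery times below are made up for illustration.

```python
import numpy as np

# Hypothetical delivery times in days, plus one extreme outlier (e.g. a lost parcel).
delivery_days = np.array([2.0, 3.0, 3.0, 4.0, 2.0, 3.0, 5.0, 3.0, 4.0])
with_outlier = np.append(delivery_days, 90.0)

# clean: mean 3.22, median 3.0; with outlier: mean 11.90, median 3.0
for name, data in [("clean", delivery_days), ("with outlier", with_outlier)]:
    print(f"{name:>12}: mean = {data.mean():5.2f}, median = {np.median(data):4.1f}")
```

One outlier nearly quadruples the mean while the median does not move. In LightGBM, the corresponding median model is `objective="quantile"` with `alpha=0.5`, or equivalently the L1 objective (`objective="l1"`), since the median minimizes the absolute error.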

Just give it a try!