Optimise main memory use with SAP BW on HANA

Main memory capacity is a far more pressing issue for SAP HANA in data warehouse scenarios than for ERP applications or other applications with relatively steady volumes of data. There is one key point to remember: make sure that you never run out of main memory.

Sizing

In terms of main memory sizing, a HANA database has a number of characteristics not found in conventional database systems. On the one hand, the main memory is the central data storage location (conventional system: Disk -> Main memory, HANA: Main memory -> Moved to disk); on the other hand, the main memory is also used for executing queries. The memory holding the stored data therefore competes with the working memory needed for queries. The SAP sizing basis for HANA databases takes account of this. In simplified terms, RAM = data footprint * 2 / 7. The data footprint is the byte width of the columns * the number of rows expected. This figure is multiplied by a factor of 2 so that half of the main memory is always available for queries, and divided by 7, as an average compression factor of 7 is assumed.
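The simplified rule above can be written out as a small calculation. This is only a sketch of the formula as stated in the text, not the official SAP sizing procedure; the function and parameter names are made up for illustration.

```python
def uncompressed_footprint_gb(row_count, column_byte_widths):
    """Uncompressed data footprint: sum of column byte widths * expected rows."""
    return row_count * sum(column_byte_widths) / 1024**3


def estimate_hana_ram_gb(footprint_gb, compression_factor=7.0, workspace_factor=2.0):
    """Simplified rule from the text: RAM = data footprint * 2 / 7.

    workspace_factor=2 keeps half of the memory free for queries,
    compression_factor=7 is the assumed average compression.
    """
    if compression_factor <= 0:
        raise ValueError("compression_factor must be positive")
    return footprint_gb * workspace_factor / compression_factor


if __name__ == "__main__":
    # Illustrative input only: 3,500 GB of uncompressed data.
    print(estimate_hana_ram_gb(3500))  # 1000.0
```

Changing `compression_factor` shows how strongly the result depends on the actual compression of your tables, which is why the assumed average of 7 should be checked against real data.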

From experience, wide fact tables are particularly suitable for compression, as their columns have low cardinality (i.e. few distinct values); compression factors of 30 are possible in some cases. Tables with high cardinality, for example with incrementing IDs, will have a lower compression factor. This means that tables with fewer columns can occupy considerably more memory than very wide tables.

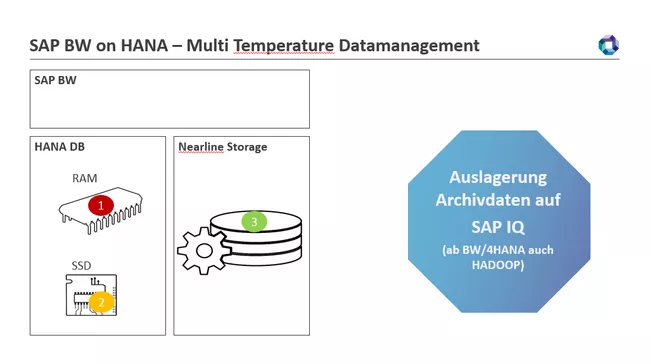

There are also a number of ways to reduce the use of main memory within HANA infrastructures so that large quantities of data can still be processed. The options with SAP BW on HANA are moving data to SSD and integrating archive servers (nearline storage, NLS). The data can still be accessed, but no longer occupies space in the main memory of the HANA database.

1: Hot -> data in the main memory

2: Warm -> data on SSD in the HANA DB - early unload or extended tables (SAP HANA Dynamic Tiering)

3: Cold -> data moved to NLS

Use of the main memory can be optimised even with a single HANA instance without extended storage (where the data model is swapped to disk), nearline storage, etc., so that even a data warehouse with multiple layers (for example an SAP BW with LSA++) does not keep an unnecessary amount of data there. This allows you to load even environments with a 1 TB data footprint into a 1 TB HANA instance. The trick is the use and explicit control of the early unload.

Early Unload – get staging tables out of the memory!

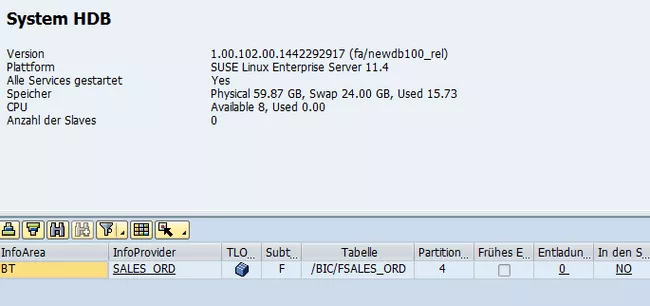

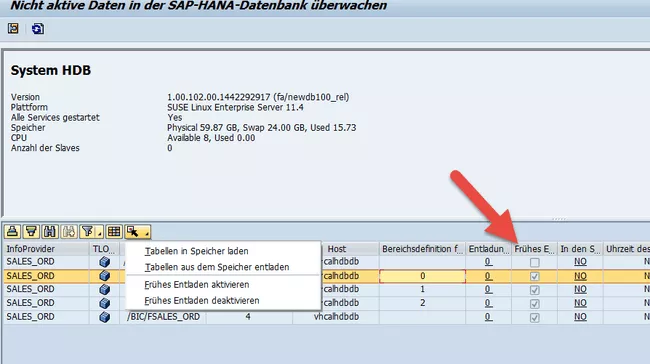

A HANA database moves tables from the main memory to physical storage (UNLOAD) on the basis of their priority. This means the data is no longer directly available for queries, but this does not matter for staging tables, as these are frequently only required at night, for example, when a process chain is running. An SAP BW on HANA can therefore occupy more memory than recommended by SAP and still operate properly, as data in the main memory is automatically unloaded in the event of high memory pressure. The sequence in which data is unloaded is controlled by a priority that can be set for each table of an object in the transaction RSHDBMON. Certain SAP BW objects such as PSA tables, change logs of normal ADSOs and write-optimised ADSO objects already have the early unload flag set by default, either throughout or in certain areas, in line with SAP's intended use.

For SAP BW objects, only priority 5 (active data for reporting) and priority 7 (early unload, for inactive data such as change logs) can be set.
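To illustrate how the priority influences the unload sequence, here is a deliberately simplified simulation. It assumes that tables with the higher priority value are unloaded first and that, within one priority, the least recently used table goes first; the real HANA mechanism is more elaborate, and the table names, sizes and the `last_access` counter are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Table:
    name: str
    size_gb: float
    unload_priority: int  # 5 = active reporting data, 7 = early unload (values from the text)
    last_access: int      # hypothetical logical timestamp, larger = used more recently


def pick_unloads(tables, used_gb, target_gb):
    """Return (names to unload, remaining usage) until usage drops to target_gb.

    Simplified model: highest unload priority first, then least recently used.
    """
    candidates = sorted(tables, key=lambda t: (-t.unload_priority, t.last_access))
    unloaded = []
    for table in candidates:
        if used_gb <= target_gb:
            break
        unloaded.append(table.name)
        used_gb -= table.size_gb
    return unloaded, used_gb


if __name__ == "__main__":
    tables = [
        Table("ACTIVE_DATA", 100, 5, 10),
        Table("PSA", 50, 7, 5),
        Table("CHANGE_LOG", 80, 7, 1),
    ]
    print(pick_unloads(tables, used_gb=230, target_gb=120))
    # (['CHANGE_LOG', 'PSA'], 100)
```

In this model the reporting data with priority 5 stays in memory as long as unloading the priority 7 tables frees enough space.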

RSHDBMON transaction

The unload controlled by the HANA database generally works well and without a significant impact on queries, as there is only write access to the physical storage and the queries are processed from the main memory. There is, however, one case where the automatic unload does not work ideally, namely the activation of very large requests. This is because all tables of the objects in question are loaded into the main memory. With a normal ADSO, these include the input table, the active table and parts of the change log. Moreover, the majority of the database operations are performed in a single transaction, which puts significant strain on the main memory. An automatically triggered unload often cannot be executed in time, so that a rollback occurs and the activation is cancelled. (See: SAP note 2399990 - How-To: Analyzing ABAP Short Dumps in SAP HANA Environments)

There are, however, two ways to support the HANA database:

1. Divide the load into small packages distributed over multiple DTPs with disjoint filters (smaller database transactions)

2. Proactively unload all tables that are not required from the main memory (planned early unload)
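For the first option, the filters of the individual DTPs must not overlap and must together cover the full selection. A minimal sketch of how such disjoint filter ranges could be derived, assuming the filter characteristic has integer values (for example a period or a numeric key); the function name is made up for illustration and the DTPs themselves still have to be maintained in SAP BW:

```python
def split_into_disjoint_ranges(low, high, parts):
    """Split the inclusive range [low, high] into up to `parts` disjoint ranges.

    The ranges do not overlap and together cover every value exactly once,
    so each one can serve as the filter of a separate DTP.
    """
    if parts < 1 or high < low:
        raise ValueError("need parts >= 1 and high >= low")
    total = high - low + 1
    parts = min(parts, total)          # never create empty ranges
    base, extra = divmod(total, parts)
    ranges = []
    start = low
    for i in range(parts):
        size = base + (1 if i < extra else 0)
        end = start + size - 1
        ranges.append((start, end))
        start = end + 1
    return ranges


if __name__ == "__main__":
    print(split_into_disjoint_ranges(1, 12, 4))
    # [(1, 3), (4, 6), (7, 9), (10, 12)]
```

Each range then corresponds to one smaller database transaction instead of one very large one.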

Sample calculation:

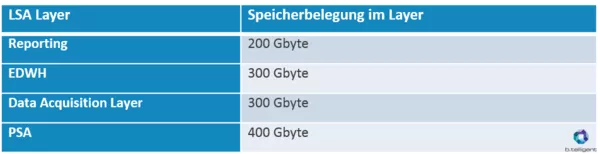

A HANA DB would therefore occupy the maximum amount of main memory for physical data storage. For a main memory of 512 Gbyte, this would be approx. 460 Gbyte, at which point the threshold for starting table unloading is reached.

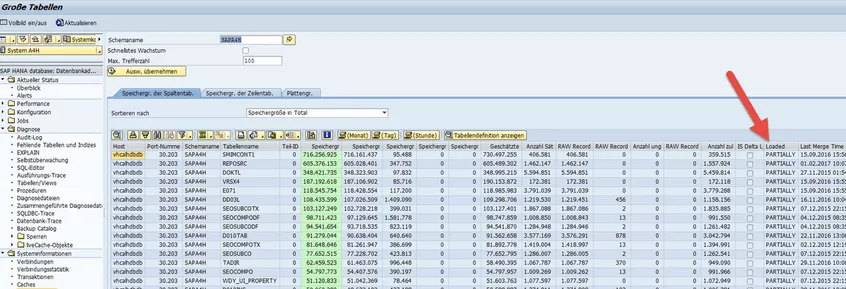

The PSA, data acquisition layer and EDWH layer are only partly loaded. These usually already have the early unload flag set and are therefore rapidly cleared from the main memory. The status is indicated in the "Loaded" column of the transaction DB02.

If we now cleared the main memory of all tables with the early unload flag set, we would unload all tables that the HANA DB only optionally keeps in the main memory. In simplified terms, we would in our example now be using only 200 Gbyte, and the additional memory space would allow us to perform even major activations in the system. This procedure can also be used to reduce the load during the day. Please note, however, that this can increase the runtime of process chains, as the staging tables may need to be loaded into the main memory again at night.
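The gain in free memory can be checked with simple arithmetic. The sketch below only uses the figures mentioned in the text (512, approx. 460 and 200 Gbyte); the function name is made up for illustration.

```python
def headroom_gb(total_ram_gb, used_gb):
    """Free main memory left for activations and queries."""
    return total_ram_gb - used_gb


if __name__ == "__main__":
    total = 512
    print(headroom_gb(total, 460))  # 52  -> before the planned early unload
    print(headroom_gb(total, 200))  # 312 -> after the planned early unload
```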

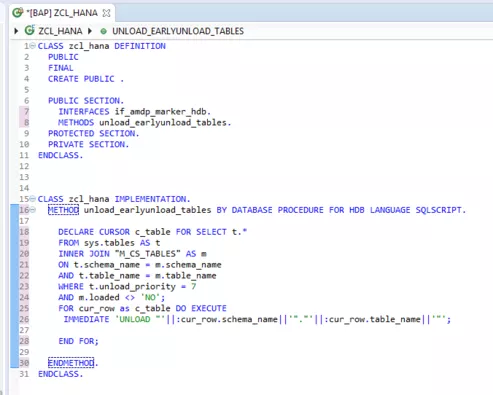

A planned early unload is very easy to implement with an ABAP Managed Stored Procedure, and can therefore be rolled out to the systems with the standard transport system. Integration into process chains or reports is also easier to implement thanks to the ABAP integration.
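The core of such a procedure is a list of UNLOAD statements for the tables concerned. The following is not the ABAP Managed Stored Procedure itself, but a Python sketch of the statement generation under some assumptions: the table metadata (schema, table name, unload priority, load status) has already been read from the database, the load status uses the values NO, PARTIALLY and FULL, and the schema and table names shown are hypothetical.

```python
def quote_ident(name):
    """Quote an SQL identifier; BW table names often contain '/'."""
    return '"' + name.replace('"', '""') + '"'


def build_unload_statements(tables, min_priority=7):
    """Build UNLOAD statements for loaded tables flagged for early unload.

    `tables` is a list of dicts with the keys schema, table,
    unload_priority and loaded (assumed values: NO, PARTIALLY, FULL).
    """
    statements = []
    for t in tables:
        if t["unload_priority"] >= min_priority and t["loaded"] != "NO":
            statements.append(
                f'UNLOAD {quote_ident(t["schema"])}.{quote_ident(t["table"])}'
            )
    return statements


if __name__ == "__main__":
    # Hypothetical metadata rows for illustration only.
    rows = [
        {"schema": "SAPBW", "table": "/BIC/B0000123000", "unload_priority": 7, "loaded": "FULL"},
        {"schema": "SAPBW", "table": "/BIC/AREPORT2", "unload_priority": 5, "loaded": "FULL"},
        {"schema": "SAPBW", "table": "/BIC/ASTAGE3", "unload_priority": 7, "loaded": "NO"},
    ]
    for stmt in build_unload_statements(rows):
        print(stmt)  # UNLOAD "SAPBW"."/BIC/B0000123000"
```

Tables with priority 5 and tables that are already unloaded are skipped, so reporting data stays in the main memory.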

To conclude, I would however stress that this procedure should only be employed in specific cases: to avoid separate handling in multiple DTPs in the case of full loads or to ensure system stability in the event of an unexpected increase in data. It is not a long-term solution, but is a good way of gaining time to prepare an infrastructure expansion or the archiving of historical data described above.